Background

Cameras traditionally capture a two-dimensional projection of a scene. However, depth information is important for many real-world applications, including robotic navigation, face recognition, gesture or pose recognition, 3D scanning, and self-driving cars. The human visual system can perceive depth by comparing the images captured by our two eyes. This is called stereo vision. In this project we will experiment with a simple computer vision/image processing technique called "shape from stereo" that, much like humans do, computes depth information from stereo images (images taken from two locations that are displaced by a certain amount).

Depth Perception

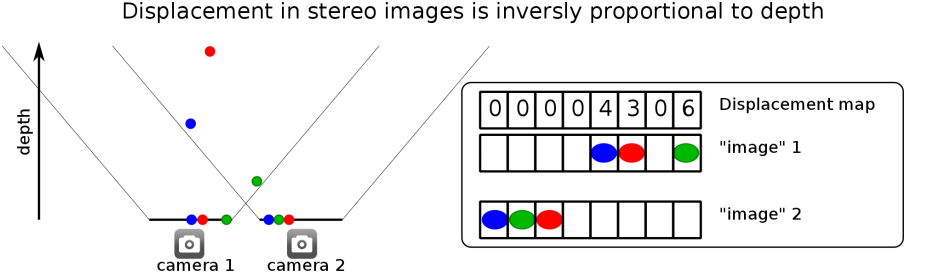

Humans can tell how far away an object is by comparing the position of the object in the left eye's image with its position in the right eye's image. If an object is really close to you, your left eye will see the object further to the right, whereas your right eye will see the object further to the left. A similar effect will occur with cameras that are offset with respect to each other, as seen below.

The above illustration shows 3 objects at different depths and positions. It also shows the positions at which the objects are projected into the images of camera 1 and camera 2. As you can see, the closest object (green) is displaced the most (6 pixels) from image 1 to image 2. The furthest object (red) is displaced the least (3 pixels). We can therefore assign a displacement value to each pixel in image 1. The array of displacements is called a displacement map. The figure shows the displacement map corresponding to image 1.

Your task will be to find the displacement map using a simple block matching algorithm. Since images are two-dimensional, we need to explain how images are represented before describing the algorithm.

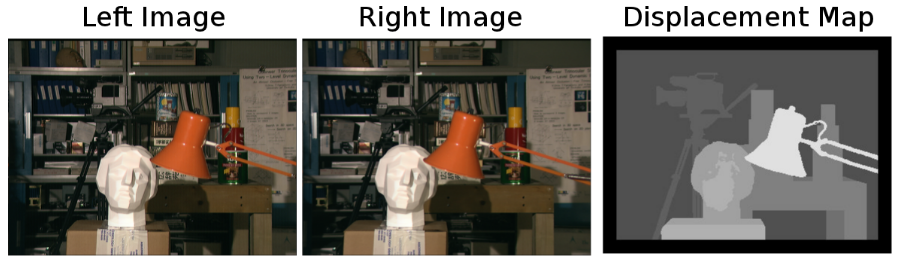

Below is a classic example of left-right stereo images and the displacement map shown as an image.

Task A

Objective

In this project, we will attempt to simulate depth perception on the computer by writing a program that can distinguish faraway objects from nearby ones.

Getting started

The files you will need to modify and submit are:

· calc_depth.c: Creates a depth map out of two images. You will be implementing the calc_depth() function.

· make_qtree.c: Creates a quadtree representation from a depth map. You will be implementing the depth_to_quad(), homogenous() and free_qtree() functions.

You are free to define and implement additional helper functions, but if you want them to be run when we grade your project, you must include them in calc_depth.c or make_qtree.c. Changes you make to any other files will be overwritten when we grade your project.

The rest of the files are part of the framework. It may be helpful to look at all the other files.

· Makefile: Defines all the compilation commands.

· depth_map.c: Loads bitmap images and calls the calc_depth() to calculate the depth map.

· calc_depth.h: Defines the signature for the calc_depth() function you will implement.

· make_qtree.h: Defines the signature for the depth_to_quad() and homogenous() functions you will implement.

· quadtree.h: Defines the qNode struct, as well as quadtree.c function headers.

· quadtree.c: Calls depth_to_quad() and free_qtree().

· utils.h: Defines Image struct and utility function signatures.

· utils.c: Defines bitmap loading, printing, and saving functions.

· test/: Contains the files necessary for testing. images/ holds input images, output will contain output files created by the program, and expected/ has the correct output of the tests. cunit contains code to help with unit testing.

Task

Your first task will be to implement the depth map generator. This function takes two input images (unsigned char *left and unsigned char *right), which represent what you see with your left and right eye, and generates a depth map using the output buffer we allocate for you (unsigned char *depth_map).

Generating a depth map

In order to achieve depth perception, we will be creating a depth map. The depth map will be a new image (same size as left and right) where each "pixel" is a value from 0 to 255 inclusive, representing how far away the object at that pixel is. In this case, 0 means infinity, while 255 means as close as can be. Consider the following illustration:

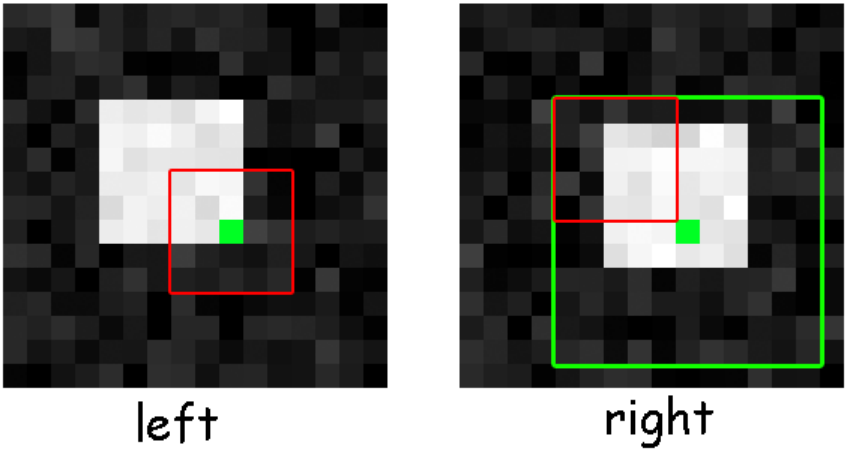

The first step is to take a small patch (here 5×5) around the green pixel. This patch is represented by the red rectangle. We call this patch a feature. To find the displacement, we would like to find the corresponding feature position in the other image. We can do that by comparing similarly sized features in the other image and choosing the one that is the most similar. Of course, comparing against all possible patches would be very time consuming. We are going to assume that there's a limit to how much a feature can be displaced — this defines a search space which is represented by the large green rectangle (here 11×11). Notice that, even though our images are named left and right, our search space extends in both the left/right and the up/down directions. Since we search over a region, if the "left image" is actually the right and the "right image" is actually the left, proper distance maps should still be generated.

The feature (a corner of a white box) was found at the position indicated by the blue square in the right image.

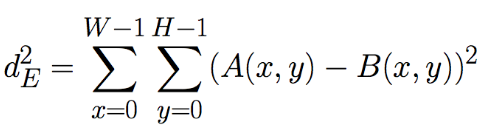

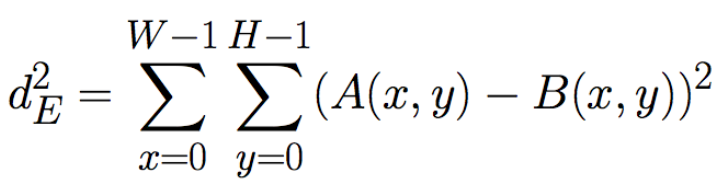

We'll say that two features are similar if they have a small Squared Euclidean Distance. If we're comparing two features, A and B, that have a width of W and height of H, their Squared Euclidean Distance is given by:

(Note that this is always a non-negative number.)

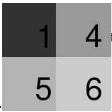

For example, given two sets of two 2×2 images below:

← Squared Euclidean distance is (1-1)^2 + (5-5)^2 + (4-4)^2 + (6-6)^2 = 0 →

← Squared Euclidean distance is (1-3)^2 + (5-5)^2 + (4-4)^2 + (6-6)^2 = 4 →

(Source: http://cybertron.cg.tu-berlin.de/pdci08/imageflight/descriptors.html)

Once we find the feature in the right image that's most similar, we check how far away from the original feature it is, and that tells us how close by or far away the object is.
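The distance computation described above can be sketched in C as follows. The function name `squared_distance` and its parameter layout are assumptions made for illustration and are not part of the provided framework. The sketch assumes both images are stored row by row with the same width, and that the caller has already checked that both patches lie entirely inside their images.

```c
/* Hypothetical helper (not part of the starter code): squared Euclidean
 * distance between the patch centred at (ax, ay) in image a and the patch
 * centred at (bx, by) in image b. Each patch spans feature_width pixels to
 * the left and right of its centre and feature_height pixels above and
 * below it. The caller must ensure both patches fit inside the images. */
unsigned int squared_distance(const unsigned char *a, int ax, int ay,
                              const unsigned char *b, int bx, int by,
                              int image_width,
                              int feature_width, int feature_height)
{
    unsigned int sum = 0;
    for (int dy = -feature_height; dy <= feature_height; dy++) {
        for (int dx = -feature_width; dx <= feature_width; dx++) {
            int pa = a[(ay + dy) * image_width + (ax + dx)];
            int pb = b[(by + dy) * image_width + (bx + dx)];
            int diff = pa - pb;          /* may be negative, so use int */
            sum += (unsigned int)(diff * diff);
        }
    }
    return sum;
}
```

Differences are computed in `int` rather than `unsigned char` so that a negative difference does not wrap around before it is squared.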

Definitions (Inputs)

We define these variables to your function:

· image_width

· image_height

· feature_width

· feature_height

· maximum_displacement

The variables feature_width and feature_height result in feature patches of size (2 × feature_width + 1) × (2 × feature_height + 1). In the previous example, feature_width = feature_height = 2, which gives a 5×5 patch. The variable maximum_displacement limits the search space. In the previous example, maximum_displacement = 3, which results in searching over (2 × maximum_displacement + 1)^2 patches in the second image to compare with.
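As a quick check of these size formulas, the arithmetic can be written out in C. The helper names `patch_pixels` and `search_positions` are hypothetical and exist only for this illustration.

```c
/* Hypothetical helpers illustrating the size formulas above. */

/* Number of pixels in one feature patch. */
int patch_pixels(int feature_width, int feature_height)
{
    return (2 * feature_width + 1) * (2 * feature_height + 1);
}

/* Number of candidate patch positions examined in the second image,
 * ignoring any candidates that would fall outside the image. */
int search_positions(int maximum_displacement)
{
    int side = 2 * maximum_displacement + 1;
    return side * side;
}
```

For the example values, `patch_pixels(2, 2)` gives 25 (a 5×5 patch) and `search_positions(3)` gives 49 candidate positions.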

Definitions (Output)

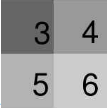

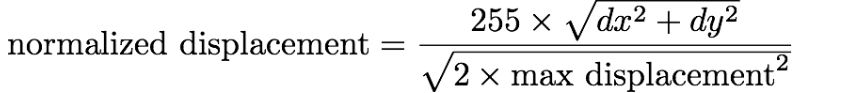

In order for our results to fit within the range of an unsigned char, we output the normalized displacement between the left feature and the corresponding right feature, rather than the absolute displacement. The normalized displacement is given by:

This function is implemented for you in calc_depth.c.

In the case of the above example, dy=1 and dx=2 are the vertical and horizontal displacements of the green pixel. This formula guarantees a value that fits in an unsigned char; here the normalized displacement is 255 × sqrt(1^2 + 2^2)/sqrt(2 × 3^2) = 134, truncated to an integer.

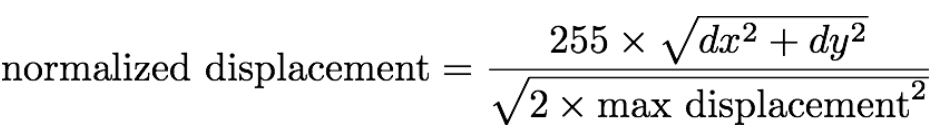

Bitmap Images

We will be working with 8-bit grayscale bitmap images. In this file format, each pixel takes on a value between 0 and 255 inclusive, where 0 = black, 255 = white, and values in between correspond to various shades of gray. Together, the pixels form a 2D matrix with image_height rows and image_width columns.

Since each pixel may be one of 256 values, we can represent an image in memory using an array of unsigned char of size image_width * image_height. We store the 2D image in a 1D array such that each row of the image occupies a contiguous part of the array. The pixels are stored from top-to-bottom and left-to-right (see illustration below):

(Source: http://cnx.org/content/m32159/1.4/rowMajor.png)


We can refer to individual pixels of the array by specifying the row and column each is located in. Recall that in a matrix, rows and columns are numbered from the top left. We will follow this numbering scheme as well, so the leftmost red square is at row 0, column 0, and the rightmost blue square is at row 2, column 1. In this project, we will also refer to an element's column # as its x position, and its row # as its y position. We call the # of columns of the image its image_width, and the # of rows of the image its image_height. Thus, the image above has a width of 2, a height of 3, and the element at x=1 and y=2 is the rightmost blue square.
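The mapping from an (x, y) position to an index in the 1D array can be sketched as below. The accessor name `pixel_at` is hypothetical and is not one of the provided utility functions.

```c
/* Hypothetical accessor: value of the pixel at column x, row y of an image
 * stored row by row, where each row is image_width pixels long. */
unsigned char pixel_at(const unsigned char *image, int image_width,
                       int x, int y)
{
    /* Skip y complete rows, then move x pixels into the current row. */
    return image[y * image_width + x];
}
```

For the 2-wide, 3-high image in the illustration, the element at x=1 and y=2 is stored at index 2 × 2 + 1 = 5, the last element of the array.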

Your task

Edit the function in calc_depth.c so that it generates a depth map and stores it in unsigned char *depth_map, which points to a pre-allocated buffer of size image_width × image_height. Two images, left and right, are provided. They are also of size image_width × image_height. The feature_width and feature_height parameters are described in the Generating a depth map section.

Here are some implementation details and tips:

· A feature is a box of width 2 × feature_width + 1 and height 2 × feature_height + 1, with the original position of the pixel at its center.

· You may not assume feature_height = feature_width = maximum_displacement. They may all be different (e.g. your feature box may be a rectangle).

· Pixels on the edge of the image, whose left-image features don't fit inside the image, should have a normalized displacement of 0 (infinite).

· When maximum_displacement is 0, the whole image would have a normalized displacement of 0.

· Your algorithm should not consider right-image features that lie partially outside the image area. However, if the left-image feature of a pixel is fully within the image area, you should always be able to assign a normalized displacement to that pixel.

· The source pixels always come from unsigned char *left, whereas the unsigned char *right image is always the one that is scanned for nearby features.

· You may not assume that unsigned char *depth_map has been filled with zeros.

· You may not store global variables that persist between multiple calls to calc_depth.

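· The Squared Euclidean Distance between two features A and B of width W and height H should be calculated as the sum, over every position (x, y) in the feature, of (A(x, y) - B(x, y))^2.

· After finding the matching feature in the right image with the smallest Squared Euclidean Distance, the normalized displacement of the pixel is given by 255 × sqrt(dx^2 + dy^2)/sqrt(2 × maximum_displacement^2), truncated to an integer, where dx and dy are the horizontal and vertical displacements of the match.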

· Ties in the Euclidean Distance should be won by the one with the smallest resulting normalized displacement.

· Some test cases are provided by make check. These are not all of the tests that we will be grading your project on.
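Two of the rules in the list above, the edge rule and the tie-breaking rule, can be sketched as small predicates. The names `feature_fits` and `better_match` are hypothetical, and this is one possible way to express the rules rather than the required structure of calc_depth().

```c
#include <stdbool.h>

/* Hypothetical helper: true if the feature centred at (x, y) lies entirely
 * inside an image of the given dimensions. */
bool feature_fits(int x, int y, int image_width, int image_height,
                  int feature_width, int feature_height)
{
    return x - feature_width >= 0 && x + feature_width < image_width &&
           y - feature_height >= 0 && y + feature_height < image_height;
}

/* Hypothetical helper: true if a candidate match should replace the best
 * match found so far. A smaller Squared Euclidean Distance wins; on a tie,
 * the smaller displacement wins. */
bool better_match(unsigned int cand_dist, int cand_dx, int cand_dy,
                  unsigned int best_dist, int best_dx, int best_dy)
{
    if (cand_dist != best_dist) {
        return cand_dist < best_dist;
    }
    return cand_dx * cand_dx + cand_dy * cand_dy <
           best_dx * best_dx + best_dy * best_dy;
}
```

The tie-break compares dx^2 + dy^2 directly. Because the normalized displacement never decreases as dx^2 + dy^2 grows, a candidate with a smaller squared displacement can never have a larger normalized displacement.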

Advice

· Try to think about how to break the problem down before starting. How do you select the closest portion of a picture? How do you evaluate how close two feature spaces are? How do you determine the distance between two pixels?

· If you get stuck, try testing a portion with the CUnit tests (see the testing section below).

· Step through the unit tests in cgdb rather than a whole program.

· If you find yourself stuck or not passing tests, double-check what the spec asks you to do. It is impossible to write effective tests if you get the expected results wrong.

Task B

Your second task will be to implement quadtree compression. This function takes a depth map (unsigned char *depth), and generates a recursive data structure called a quadtree.

Quadtree Compression

The depth maps that we create in this project are just 2D arrays of unsigned char. When we interpret each value as a square pixel, we can output a rectangular image. We used bitmaps in this project, but it would be incredibly space inefficient if every image on the internet were stored this way, since bitmaps store the value of every pixel separately. Instead, there are many ways to compress images (ways to store the same image information with a smaller filesize). In task B, you will be asked to implement one type of compression using a data structure called a quadtree.

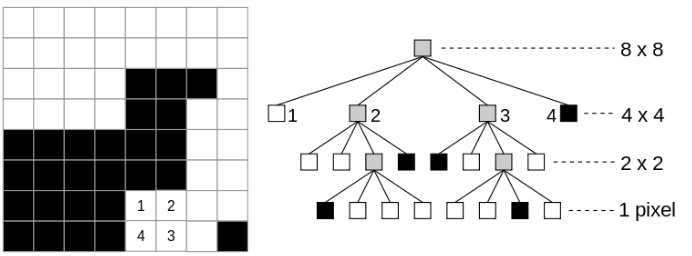

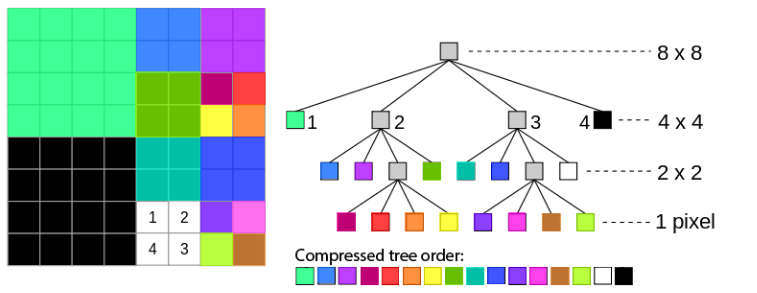

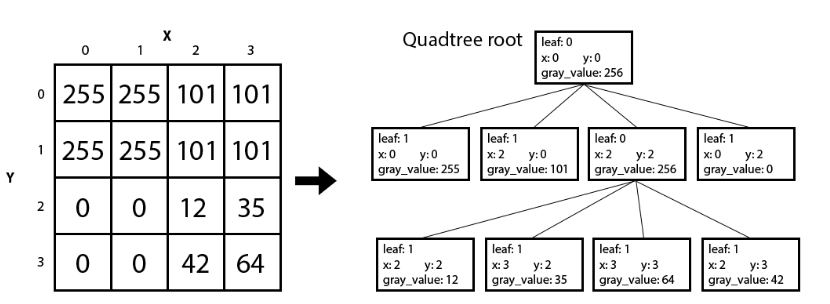

A quadtree is similar to a binary tree, except each node must have either 0 children or 4 children. When applied to a square bitmap image whose width and height can be expressed as 2^N, the root node of the tree represents the entire image. Each node of the tree represents a square sub-region within the image. We say that a square region is homogeneous if its pixels all have the same value. If a square region is not homogeneous, then we divide the region into four quadrants, each of which is represented by a child of the quadtree parent node. If the square region is homogeneous, then the quadtree node has no children and instead has a value equal to the color of the pixels in that region.

We continue checking for homogeneity of the image sections represented by each node until every leaf node contains only pixels of a single grayscale value. Each leaf node in the quadtree is associated with a square section of the image and a particular grayscale value. Any non-leaf node will have a value of -1 (outside the grayscale range) associated with it, and should have 4 child nodes.

(Source: http://en.wikipedia.org/wiki/File:Quad_tree_bitmap.svg)

We will be numbering each child node created (1-4) clockwise from the top left square, and labeling each with its ordinal direction (NW, NE, SE, SW). When parsing through nodes, we will use this order: 1: NW, 2: NE, 3: SE, 4: SW.
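The clockwise ordering can be illustrated by computing the top-left corner of each quadrant of a square region. The `quadrant` struct and the `split_region` function are hypothetical names used only for this sketch; they are not part of quadtree.h.

```c
/* Hypothetical type describing one square quadrant of a region. */
typedef struct {
    int x;     /* leftmost column of the quadrant */
    int y;     /* topmost row of the quadrant */
    int size;  /* side length of the quadrant in pixels */
} quadrant;

/* Splits the square region with top-left corner (x, y) and side length size
 * into four quadrants, written to out in the order 1: NW, 2: NE, 3: SE,
 * 4: SW. Assumes size is a power of two greater than 1. */
void split_region(int x, int y, int size, quadrant out[4])
{
    int half = size / 2;
    out[0] = (quadrant){ x,        y,        half };  /* 1: NW */
    out[1] = (quadrant){ x + half, y,        half };  /* 2: NE */
    out[2] = (quadrant){ x + half, y + half, half };  /* 3: SE */
    out[3] = (quadrant){ x,        y + half, half };  /* 4: SW */
}
```

Splitting an 8×8 region at (0, 0) gives quadrants at (0, 0), (4, 0), (4, 4), and (0, 4), each of side length 4.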

Given a quadtree, we can choose to only keep the leaf nodes and use this to represent the original image. Because the leaf nodes should contain every color value represented, the non-leaf nodes are not needed to reconstruct the image. This compression technique works well if an image has large regions with the same grayscale value (artificial images), versus images with lots of random noise (real images). Depth maps are a relatively good input, since we get large regions with similar depths.

Your Job

Your task is to write the depth_to_quad(), homogenous() and free_qtree() functions located in make_qtree.c. The first function, depth_to_quad(), takes an array of unsigned char, converts it into a quadtree, and returns a pointer to the root qNode in the tree. Keep in mind that local variables don't last after your function returns, so you must use dynamic memory allocation in the function. Since memory allocation could fail, you need to check whether the pointer returned by malloc() is valid or not. If it is NULL, you should call allocation_failed() (defined in utils.h).

Your representation should use a tree of qNodes, all of which either have 0 or 4 children. The declaration of the struct qNode is in quadtree.h.

The second function, homogenous(), takes in the depth_map as well as a region of the image (top left coordinates, width, and height). If every pixel in that region has the same grayscale value, then homogenous() should return that value. Otherwise, if the section is non-homogeneous, it should return -1.
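The check described above can be sketched as follows. The function name `region_value` and its parameter list are assumptions made for illustration; the actual signature of homogenous() is declared in make_qtree.h and may differ.

```c
/* Hypothetical stand-in for the homogeneity check: returns the shared
 * grayscale value of the region whose top-left corner is (x, y), or -1 if
 * the region contains more than one value. map_width is the width of the
 * whole depth map, which is stored row by row. */
int region_value(const unsigned char *depth_map, int map_width,
                 int x, int y, int width, int height)
{
    int first = depth_map[y * map_width + x];
    for (int row = y; row < y + height; row++) {
        for (int col = x; col < x + width; col++) {
            if (depth_map[row * map_width + col] != first) {
                return -1;  /* found a pixel that differs */
            }
        }
    }
    return first;
}
```

Returning `int` rather than `unsigned char` leaves room for the -1 result, which lies outside the 0 to 255 grayscale range.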

The third function, free_qtree(), should take in the root of a quadtree and free all the memory associated with that tree. Since any node is itself the root of a subtree, the root passed in just needs to be a node allocated with malloc(), not necessarily the root of the original tree.
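Freeing a tree recursively can be sketched with a simplified node type. The `demo_node` struct, `demo_leaf`, and `demo_free` are hypothetical; the real qNode is declared in quadtree.h and its fields may differ. Returning a count of freed nodes is only a convenience for checking the sketch and is not something the project asks for.

```c
#include <stdlib.h>

/* Hypothetical node type for illustration only. */
typedef struct demo_node {
    int gray_value;              /* -1 for non-leaf nodes */
    struct demo_node *child[4];  /* NW, NE, SE, SW; all NULL for a leaf */
} demo_node;

/* Allocates a node with no children. Returns NULL if malloc() fails; the
 * project itself asks you to call allocation_failed() in that case. */
demo_node *demo_leaf(int gray_value)
{
    demo_node *node = malloc(sizeof *node);
    if (node == NULL) {
        return NULL;
    }
    node->gray_value = gray_value;
    for (int i = 0; i < 4; i++) {
        node->child[i] = NULL;
    }
    return node;
}

/* Frees the subtree rooted at node, children first, and returns the number
 * of nodes freed. A NULL pointer is accepted and frees nothing. */
int demo_free(demo_node *node)
{
    if (node == NULL) {
        return 0;
    }
    int freed = 1;
    for (int i = 0; i < 4; i++) {
        freed += demo_free(node->child[i]);
    }
    free(node);  /* free the parent only after its children */
    return freed;
}
```

The children are freed before the parent because the child pointers live inside the parent node and must not be read after it has been freed.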

Here are a few key points regarding your quadtree representation:

1. Leaves should have the boolean value leaf set to true, while all other nodes should have it as false.

2. The gray_value of leaves should be set to their grayscale value, but non-leaves should take on the value -1.

3. The x and y variables should hold the top-left pixel coordinate of the image section the node represents.

4. We only require that your code works with images that have widths that are powers of two. This means that all qNode sizes will also be powers of two and the smallest qNode size will be one pixel.

5. The four child nodes are marked with ordinal directions (NW, NE, SE, SW), which you should match closely to the corresponding sections of the image.

6. Some test cases are provided by make check. These are not all of the tests that we will be grading your project on.

7. Don't worry about NULL images or images of size zero; we won't test for these (but you're welcome to check for them anyway and return NULL).

8. Your final code needs to have no memory leaks. Make sure your free_qtree() frees the entire subtree associated with a root.

9. You may not assume that the pointer passed in to free_qtree() is not NULL.

The following example illustrates these points:

Turning a matrix into a quadtree.


You can compile your code for task B with the following command:

Advice

· Try segmenting the problem. First construct the quadtree, and then try including compression.

· If you find yourself stuck, add CUnit tests (see testing).

· Run valgrind to make sure your code has no memory leaks. You should figure out how to do this from task C.

Task C

The final task is a small exercise intended to teach you how to use valgrind. From lecture and lab you should have seen that memory that is allocated and not freed results in a memory leak. One particularly useful piece of software for detecting memory leaks is valgrind, and it is already installed on the hive. If you learn how to use valgrind, you can quickly detect many memory errors (not just memory leaks). Unfortunately, many students do not realize how powerful valgrind can be, so this exercise is intended to help you learn to use valgrind and to approach documentation in general. In the depth_map program there exists exactly 1 memory leak in the starter code. Your task is to find the memory leak and develop a solution. When you find the solution, you will edit leak_fix.py with the location of the leak and the line of C code needed to fix it. For example, if there were a file called example.c with a memory leak that could be solved by freeing the variable weezy right before line 15, then you would fill in the Python file to contain:

filename = "example.c"

linenum = 15

line = "free (weezy);"

We will insert the line you specified in the file you specified via a script. You only need to supply a working line number (not any particular line number). The line you insert should be a valid line of C code. This exercise is not meant to be difficult, but is intended to get you to learn how to use testing software from its documentation. It is of course possible to brute-force the answer, but that will defeat the purpose and most likely take longer. Because of these goals we will have the following rules:

· You may not share any information about the file to check, the line number, or the variable that needs to be freed.

· You may not post any information about the proper way to use valgrind to find the memory leak. Half this task is about learning how to read documentation.

Debugging and Testing

Your code is compiled with the -g flag, so you can use CGDB to help debug your program. While adding in print statements can be helpful, CGDB can make debugging a lot quicker, especially for memory access-related issues. While you are working on the project, we encourage you to keep your code under version control via your GitHub Classroom account.

In addition, we have included a few functions to help make development and debugging easier:

· print_image(const unsigned char *data, int width, int height): This function takes in an array of pixels and prints their values in hex to standard output.

· save_image(char *filename, const unsigned char *data, int width, int height): This function takes in an array of pixels and saves them to a new bmp file at a specified filename.

· print_qtree(qNode *qtree_root): This function takes in a qNode and prints out the quadtree.

· print_compressed(qNode *qtree_root): This function takes in a qNode and prints out the compressed representation of the quadtree.

The test cases we provide you are not all the test cases we will test your code with. You are highly encouraged to write your own tests before you submit. Feel free to add additional tests into the skeleton code, but do not make any modifications to function signatures or struct declarations. This can lead to your code failing to compile during grading.
