Classification Assignment

Project Description

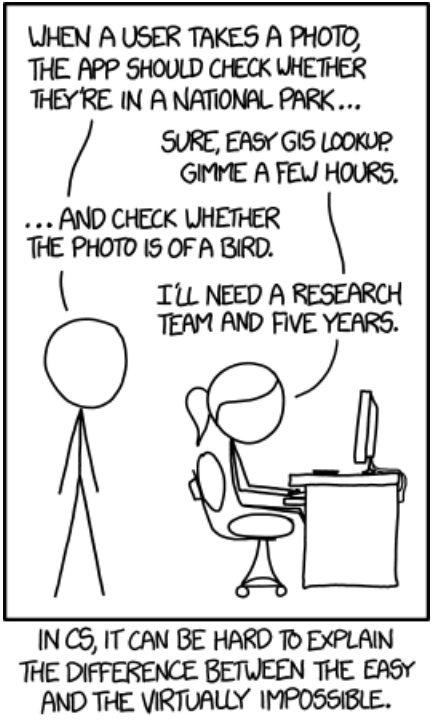

The goal for this project is to build a classifier that can distinguish between pictures of birds and pictures of non-birds. The training and testing data for this task is adapted from CIFAR-10 and CIFAR-100.

http://www.cs.toronto.edu/~kriz/cifar.html

These are widely used computer vision data sets that together contain 120,000 labeled images drawn from 110 different categories.

The subset of images that we will be working with contains 10,000 labeled training images. Half of these are images of birds while the other half have been randomly selected from the remaining 109 image categories.

Here are some examples of images that contain birds:

[Example images of birds]

Here are some examples of images that do not contain birds:

[Example images of non-birds]

The data can be downloaded from the project GitHub page. You will submit your labels through the project Kaggle page for evaluation.

Requirements

For full credit you must apply at least three different learning algorithms to this problem and provide a comparison of the results. You do not need to implement all three algorithms from scratch. There are a number of mature machine learning libraries available for Python. The most popular is scikit-learn:

· http://scikit-learn.org/stable/

You do need to provide your own implementation of at least one learning algorithm for this problem. You are welcome to use the single-layer neural network that we worked on as an in-class exercise, or you may implement something else if you prefer.
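
As a rough illustration of what a from-scratch implementation can look like, here is a minimal sketch of one possible single-layer network: a single sigmoid unit trained with batch gradient descent on the cross-entropy loss. It is not necessarily the same as the in-class exercise. The function names, the learning rate, the epoch count, and the synthetic data are all assumptions made for the example.

```python
import numpy as np

def sigmoid(z):
    """Logistic activation for the single output unit."""
    return 1.0 / (1.0 + np.exp(-z))

def train_single_layer(x, y, learning_rate=0.1, epochs=200):
    """Fit weights and bias with batch gradient descent on cross-entropy loss."""
    n, d = x.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        p = sigmoid(x @ w + b)      # predicted probability of class 1
        error = p - y               # gradient of the loss w.r.t. the pre-activation
        w -= learning_rate * (x.T @ error) / n
        b -= learning_rate * error.mean()
    return w, b

def predict(x, w, b):
    """Label an example 1 when the predicted probability is at least 0.5."""
    return (sigmoid(x @ w + b) >= 0.5).astype(int)

# Synthetic, linearly separable stand-in data (not the project data).
rng = np.random.default_rng(0)
x = rng.normal(size=(200, 5))
true_w = np.array([1.5, -2.0, 0.5, 0.0, 1.0])
y = (x @ true_w > 0).astype(int)

w, b = train_single_layer(x, y)
accuracy = (predict(x, w, b) == y).mean()
print("training accuracy:", accuracy)
```

On the real images, the inputs would be the scaled 3072-dimensional vectors (or extracted features), and accuracy should be measured on held-out data rather than on the training set.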

For full credit, you must achieve a classification rate above 80%.

You must submit your completed Python code along with a README that includes clear instructions for reproducing your results.

Along with your code, you must also submit a short (2-3 page) report describing your approach to the problem and your results. Your report must include results for all three algorithms. Your report will be graded on the basis of content as well as style. Your writing should be clear, concise, well-organized, and grammatically correct. Your report should include at least one figure illustrating your results.

Suggestions

· Since you can only upload a few Kaggle submissions per day, it will be critical that you use some sort of validation to tune the parameters of your algorithms.
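
One simple form of validation is to hold out part of the labeled training set and measure accuracy on it. The sketch below assumes the data is a NumPy array with one flattened image per row and a matching array of 0/1 labels. The helper name `train_val_split`, the 20% split, and the stand-in arrays are illustrative assumptions, not part of the project files.

```python
import numpy as np

def train_val_split(data, labels, val_fraction=0.2, seed=0):
    """Shuffle the examples and hold out a fraction of them for validation."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(data))
    n_val = int(len(data) * val_fraction)
    val_idx, train_idx = order[:n_val], order[n_val:]
    return data[train_idx], labels[train_idx], data[val_idx], labels[val_idx]

# Stand-in arrays with the same layout as the project data.
rng = np.random.default_rng(1)
data = rng.random((100, 3072))
labels = rng.integers(0, 2, size=100)

x_train, y_train, x_val, y_val = train_val_split(data, labels)
print(x_train.shape, x_val.shape)
```

Tune parameters against the validation portion only, and save Kaggle submissions for settings that already look good locally.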

· The input data is stored as 8-bit color values in the range 0-255. Many learning algorithms are sensitive to the scaling of the input data, and expect the values to be in a more reasonable range, like [0, 1], [-1, 1], or centered around zero with unit variance. The following would be a simple first step:

    import numpy as np

    train_data = np.load('data/train_data.npy')  # load all the training data
    train_data = train_data / 255.0  # now it is in the range 0-1
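
For the "centered around zero with unit variance" option, a minimal sketch follows. It computes per-feature statistics on the training data and reuses them for any other data, so that both sets are transformed identically. The helper names, the small `eps` term, and the random stand-in arrays are assumptions for illustration.

```python
import numpy as np

def fit_standardizer(train_data, eps=1e-8):
    """Compute the per-feature mean and standard deviation from the training data."""
    mean = train_data.mean(axis=0)
    std = train_data.std(axis=0) + eps  # eps avoids division by zero for constant features
    return mean, std

def standardize(data, mean, std):
    """Center and scale data using statistics computed on the training set."""
    return (data - mean) / std

# Stand-in arrays of 8-bit values in place of the real images.
rng = np.random.default_rng(0)
train_data = rng.integers(0, 256, size=(200, 3072)).astype(np.float64)
test_data = rng.integers(0, 256, size=(50, 3072)).astype(np.float64)

mean, std = fit_standardizer(train_data)
train_scaled = standardize(train_data, mean, std)
test_scaled = standardize(test_data, mean, std)  # same statistics, not recomputed
```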

· State-of-the-art solutions for tasks like this are based on convolutional neural networks. The easiest library to get started with is probably Keras (https://keras.io/). Keras isn't installed on the lab machines, but you should be able to install it into your account using the following commands:

    pip install --user wheel
    pip install --user tensorflow-gpu==1.4
    export LD_LIBRARY_PATH="$LD_LIBRARY_PATH:/usr/local/cuda-8.0/targets/x86_64-linux/lib/"

This installs TensorFlow, which includes Keras. The file keras_example.py shows an example of using Keras to create a simple three-layer neural network.

· Performing learning directly on the 3072-dimensional image vectors will be very computationally expensive for some algorithms. It may be beneficial to perform some sort of feature extraction (https://en.wikipedia.org/wiki/Feature_extraction) prior to learning. This could be something as simple as rescaling the images from 32×32 pixels (3072 dimensions) down to 4×4 pixels (48 dimensions):

    import numpy as np
    import scipy.misc
    from matplotlib import pyplot as plt

    train_data = np.load('data/train_data.npy')  # load all the training data
    img = train_data[0, :]  # grab the 0th image
    img = img.reshape(32, 32, 3)  # "unflatten" the image
    # Note: scipy.misc.imresize was removed in SciPy 1.3, so this call needs an older SciPy.
    small_img = scipy.misc.imresize(img, (4, 4, 3))  # rescale the image
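
Since scipy.misc.imresize was removed in SciPy 1.3, a NumPy-only alternative may be useful. The sketch below shrinks every image at once by averaging non-overlapping 8×8 pixel blocks. This is plain block averaging, not the interpolation that imresize performed, so the values will differ somewhat. The function name `downsample_images` and the random stand-in data are assumptions. The block size must divide 32 evenly.

```python
import numpy as np

def downsample_images(flat_images, factor=8):
    """Shrink flattened 32x32x3 images by averaging factor x factor pixel blocks."""
    n = flat_images.shape[0]
    small_side = 32 // factor
    images = flat_images.reshape(n, 32, 32, 3).astype(np.float64)
    # Split the row and column axes into (block index, offset within block).
    blocks = images.reshape(n, small_side, factor, small_side, factor, 3)
    small = blocks.mean(axis=(2, 4))  # average over the two within-block axes
    return small.reshape(n, -1)       # flatten back to one feature vector per image

# Stand-in for the real training array: 10 random flattened images.
rng = np.random.default_rng(0)
train_data = rng.integers(0, 256, size=(10, 3072), dtype=np.uint8)

features = downsample_images(train_data)
print(features.shape)  # (10, 48)
```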

· Some algorithms may benefit from data augmentation. The idea behind data augmentation is to artificially increase the size of the training set by introducing modified versions of the training images. The simplest example of this would be to double the size of the training set by introducing a flipped version of each image.
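
The flipping idea can be sketched in a few lines of NumPy. This assumes each row is a flattened image that unflattens to (row, column, channel) with `reshape(32, 32, 3)`, as in the rescaling example, and that a left-right mirror image keeps its label. The function name and the stand-in arrays are illustrative.

```python
import numpy as np

def add_horizontal_flips(flat_images, labels):
    """Return the original images plus a left-right mirrored copy of each one."""
    n = flat_images.shape[0]
    images = flat_images.reshape(n, 32, 32, 3)
    flipped = images[:, :, ::-1, :]  # reverse the column axis
    augmented = np.concatenate([flat_images, flipped.reshape(n, -1)], axis=0)
    augmented_labels = np.concatenate([labels, labels], axis=0)  # flips keep their labels
    return augmented, augmented_labels

# Stand-in data: 10 random flattened images with 0/1 labels.
rng = np.random.default_rng(0)
train_data = rng.integers(0, 256, size=(10, 3072), dtype=np.uint8)
train_labels = rng.integers(0, 2, size=10)

aug_data, aug_labels = add_horizontal_flips(train_data, train_labels)
print(aug_data.shape, aug_labels.shape)  # (20, 3072) (20,)
```

Augment only the training portion of the data. Flipped copies in a validation set would make the validation score less trustworthy.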
