Statistics 305a


1.(4 pts)

What do cross-validation and the Cp statistic both try to achieve? What are the assumptions underlying each, and in what way do they fail?
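The code below shows what the two criteria have in common, as a minimal sketch on simulated data (a hypothetical linear truth with unit noise). It scores polynomial fits of degree 1 to 5 with both 5-fold cross-validation and Cp, and each score is an estimate of prediction error on new data. The Cp form used here, (RSS + 2dσ²)/n with d fitted coefficients, is one common convention. Estimating σ² from the largest model is an assumption of this sketch.

```python
import numpy as np

def design(x, degree):
    # Polynomial design matrix with columns 1, x, ..., x**degree.
    return np.vander(x, degree + 1, increasing=True)

def fit_rss(X, y):
    # Residual sum of squares of the least-squares fit of y on X.
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    r = y - X @ beta
    return float(r @ r)

def kfold_cv(x, y, degree, k=5, seed=1):
    # Average squared prediction error on held-out folds.
    idx = np.random.default_rng(seed).permutation(len(y))
    errors = []
    for fold in np.array_split(idx, k):
        train = np.setdiff1d(idx, fold)
        beta, *_ = np.linalg.lstsq(design(x[train], degree), y[train], rcond=None)
        pred = design(x[fold], degree) @ beta
        errors.append(np.mean((y[fold] - pred) ** 2))
    return float(np.mean(errors))

def cp(x, y, degree, sigma2):
    # Cp in the (RSS + 2 d sigma^2) / n form, d = number of fitted coefficients.
    n, d = len(y), degree + 1
    return (fit_rss(design(x, degree), y) + 2 * d * sigma2) / n

rng = np.random.default_rng(0)
n = 100
x = rng.uniform(-1, 1, n)
y = 1 + 2 * x + rng.normal(0, 1, n)  # simulated data; the true curve is linear

max_degree = 5
# Cp needs an estimate of sigma^2; here it comes from the largest model (an assumption).
sigma2_hat = fit_rss(design(x, max_degree), y) / (n - (max_degree + 1))

degrees = list(range(1, max_degree + 1))
cv_scores = [kfold_cv(x, y, d) for d in degrees]
cp_scores = [cp(x, y, d, sigma2_hat) for d in degrees]
```

Cross-validation only re-uses the data, while Cp leans on the least-squares setting and on a trustworthy σ² estimate. Printing the two lists side by side shows how closely the two estimates track each other on this data.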

2.(3 pts)

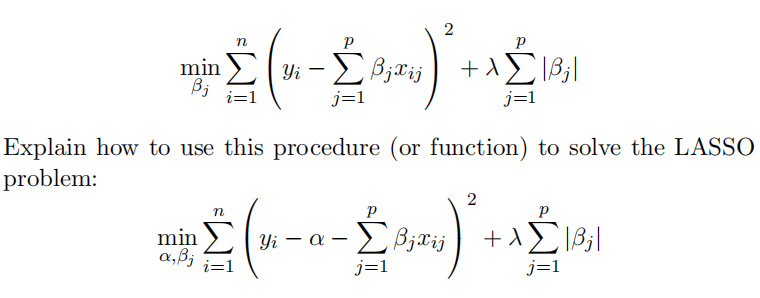

Suppose you are given a computational procedure that can solve the following problem for you:

3.(5 pts)

In linear regression, every time you include an additional predictor, the residual sum of squares on the training data is monotone nonincreasing.

(a) Give a simple argument for why this is true.

(b) You start with the mean model and get RSS₀ = 98. You include X₁ and get RSS₁ = 96. Unhappy with this, you leave out X₁, put in X₂, and get RSS₂ = 97. Totally disgusted, you dump them both into the model, and are flabbergasted to find RSS₁₂ = 63. Is this possible? Explain (via arguments or sketches or both).
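The sketch below gives a quick numerical check of both parts on simulated data. The variable names and data-generating choices are illustrative assumptions.

For (a), the fits are nested: padding the smaller model's coefficients with a zero gives a feasible choice in the larger model, so the minimized RSS cannot increase.

For (b), the numbers in the problem can arise when X₁ and X₂ are nearly collinear and y depends on their small difference. Each predictor alone is almost uncorrelated with y, but together they span it.

```python
import numpy as np

def rss(X, y):
    """Residual sum of squares of the least-squares fit of y on the columns of X."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    r = y - X @ beta
    return float(r @ r)

rng = np.random.default_rng(0)
n = 100
ones = np.ones(n)

# Part (a): start from the mean model and add pure-noise columns one at a time.
noise = rng.normal(size=(n, 10))
y_a = rng.normal(size=n)
path = [rss(np.column_stack([ones, noise[:, :k]]), y_a) for k in range(11)]

# Part (b): x1 and x2 are nearly identical, but y depends on their small difference.
z = rng.normal(size=n)
u, v = rng.normal(size=n), rng.normal(size=n)
x1 = z + 0.1 * u
x2 = z + 0.1 * v
y = 10 * (x1 - x2) + 0.5 * rng.normal(size=n)  # equals (u - v) plus noise

rss0 = rss(ones[:, None], y)
rss1 = rss(np.column_stack([ones, x1]), y)
rss2 = rss(np.column_stack([ones, x2]), y)
rss12 = rss(np.column_stack([ones, x1, x2]), y)
```

Geometrically, y is almost orthogonal to each of X₁ and X₂ separately. It lies close to the plane they span, along the direction of X₁ − X₂.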

5.(4 pts)

Two biologists working on different experiments compare their success with regression. Biologist 1 has an R² of 90% using fewer variables than Biologist 2, who has an R² of only 55%. Discuss such comparisons, and describe a scenario in which Biologist 2 is actually doing better.
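R² = 1 − RSS/TSS depends on how spread out the response is, not only on how precisely the model predicts. The simulated scenario below is a hypothetical setup chosen for illustration.

- **Biologist 1** has one predictor spread over a wide range, with noise standard deviation 1.
- **Biologist 2** has three predictors over a narrow range, with noise standard deviation 0.4.

Biologist 2 ends up with a lower R² but a smaller residual standard error, so their predictions are more precise in the response's own units.

```python
import numpy as np

def fit_stats(X, y):
    # Least-squares fit with intercept; returns (R^2, residual standard error).
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ beta
    rss = resid @ resid
    tss = np.sum((y - y.mean()) ** 2)
    r2 = 1 - rss / tss
    rse = np.sqrt(rss / (len(y) - X1.shape[1]))
    return float(r2), float(rse)

rng = np.random.default_rng(0)
n = 200
# Biologist 1 (hypothetical): one predictor on a wide range, noise sd 1.0.
x_1 = rng.uniform(0, 10, size=(n, 1))
y_1 = x_1[:, 0] + rng.normal(0, 1.0, n)
# Biologist 2 (hypothetical): three predictors on a narrow range, noise sd 0.4.
x_2 = rng.uniform(0, 1, size=(n, 3))
y_2 = x_2.sum(axis=1) + rng.normal(0, 0.4, n)

r2_1, rse_1 = fit_stats(x_1, y_1)
r2_2, rse_2 = fit_stats(x_2, y_2)
```

With these choices, Biologist 1's R² comes out near 0.9 and Biologist 2's near 0.6. Biologist 2's residual standard error is roughly 2.5 times smaller.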

6.(5 pts)

Suppose my p = 256 predictors for each observation (pieces of cod meat) are actually values of a NIR spectrum sampled at 256 uniformly spaced frequencies. I have only 200 samples of fish, each with such an xᵢ ∈ Rᵖ and a response yᵢ ∈ R¹, which is the percentage of fat in the sample. To reduce the complexity of my fit, I decide to constrain the least squares coefficients to be equal in blocks of 16, i.e. β₁ = β₂ = · · · = β₁₆, β₁₇ = β₁₈ = · · · = β₃₂, and so on. How would you fit such a model?
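One way to fit this is to reparameterize. Write β = Mγ, where M is a 256 × 16 block-indicator matrix and γ holds the 16 shared values. Then Xβ = (XM)γ, so ordinary least squares on the 16 block sums recovers the constrained fit.

The sketch below follows that approach. Including an intercept is an assumption, and the random arrays are stand-ins for illustration only, not the cod spectra.

```python
import numpy as np

def fit_blocked(X, y, block=16):
    """Least squares with beta constrained to be equal within consecutive blocks."""
    n, p = X.shape
    if p % block != 0:
        raise ValueError("p must be a multiple of the block size")
    m = p // block
    # M is p x m with M[k, j] = 1 when predictor k belongs to block j, so beta = M @ gamma.
    M = np.kron(np.eye(m), np.ones((block, 1)))
    # X @ beta = (X @ M) @ gamma: each column of Z sums the predictors in one block.
    Z = X @ M
    Z1 = np.column_stack([np.ones(n), Z])  # add an intercept column
    coef, *_ = np.linalg.lstsq(Z1, y, rcond=None)
    intercept, gamma = coef[0], coef[1:]
    return intercept, M @ gamma  # expand gamma back to all p coefficients

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 256))  # random stand-in for the NIR spectra
y = rng.normal(size=200)         # random stand-in for fat percentage
intercept, beta = fit_blocked(X, y)
```

The reduced problem has 17 parameters instead of 257, which is comfortably estimable from 200 samples.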

7.(2 pts)

Consider forward stepwise regression versus backward stepwise regression. Forward stepwise starts with nothing in the model and, at each step, adds the variable that reduces the residual sum of squares the most. Backward stepwise works the other way: it starts from the full model and, at each step, drops the variable whose removal increases the residual sum of squares the least.

(a) Give one advantage of forward over backward.

(b) Give one advantage of backward over forward.
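The two greedy procedures described above can be sketched with plain least squares. The function names and the simulated data are illustrative assumptions.

- **Forward** never needs the full fit.
- **Backward** begins from a fit that uses every variable. When p + 1 ≥ n, that full fit typically interpolates the data, so the RSS comparisons lose their meaning.

```python
import numpy as np

def rss(X, y, cols):
    # RSS of the least-squares fit of y on an intercept plus the given columns.
    X1 = np.column_stack([np.ones(len(y))] + [X[:, c] for c in cols])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    r = y - X1 @ beta
    return float(r @ r)

def forward_stepwise(X, y):
    # Repeatedly add the variable giving the smallest RSS; return the entry order.
    remaining, chosen = list(range(X.shape[1])), []
    while remaining:
        best = min(remaining, key=lambda c: rss(X, y, chosen + [c]))
        chosen.append(best)
        remaining.remove(best)
    return chosen

def backward_stepwise(X, y):
    # Repeatedly drop the variable whose removal raises RSS the least; return the drop order.
    kept, dropped = list(range(X.shape[1])), []
    while kept:
        worst = min(kept, key=lambda c: rss(X, y, [k for k in kept if k != c]))
        dropped.append(worst)
        kept.remove(worst)
    return dropped

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
y = 3 * X[:, 0] + rng.normal(size=100)  # only column 0 matters in this simulation
fwd = forward_stepwise(X, y)
bwd = backward_stepwise(X, y)
```

On this data, forward enters column 0 first and backward keeps it until last. On data like the X₁, X₂ pair in question 3(b), the two procedures can disagree.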

8.(3 pts)

Suppose that an investigator finds that the risk of breast cancer B in an observational study is strongly correlated with dietary fat intake. He throws “total consumption of saturated fats” into the model and finds that its parameter estimate is significant. However, when he adds “total consumption of unsaturated fats” into the regression as well, its parameter estimate is not significant. He then concludes that saturated fats are dangerous, but unsaturated fats are not. Comment, and give any suggestions for more informative studies.
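One statistical issue here is collinearity, illustrated below with a minimal simulation. The exposures, their 0.95 correlation, and the equal effects are hypothetical choices, not data from any study.

When two strongly correlated predictors enter together, the standard error of each coefficient is inflated by roughly the square root of the variance inflation factor, VIF = 1/(1 − r²). A non-significant coefficient is therefore weak evidence of no effect, even when both exposures truly matter.

```python
import numpy as np

def ols_se(X, y):
    # Least-squares coefficients and standard errors (intercept dropped from output).
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ beta
    sigma2 = resid @ resid / (len(y) - X1.shape[1])
    cov = sigma2 * np.linalg.inv(X1.T @ X1)
    return beta[1:], np.sqrt(np.diag(cov))[1:]

rng = np.random.default_rng(0)
n = 300
# Hypothetical exposures: saturated and unsaturated fat intake with correlation about 0.95.
sat = rng.normal(0, 1, n)
unsat = 0.95 * sat + np.sqrt(1 - 0.95**2) * rng.normal(0, 1, n)
# Hypothetical truth: both kinds of fat raise the risk score equally.
risk = 0.3 * sat + 0.3 * unsat + rng.normal(0, 1, n)

b_alone, se_alone = ols_se(sat[:, None], risk)
b_both, se_both = ols_se(np.column_stack([sat, unsat]), risk)
r = np.corrcoef(sat, unsat)[0, 1]
vif = 1 / (1 - r**2)
```

Here the standard error of the saturated-fat coefficient grows by about a factor of three once unsaturated fat is added. The simulation does not address the separate problem of confounding in observational data.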

9.(4 pts)

In a linear regression, suppose σ² = 1 and n = 100. You have a choice of two models:

Model 1. number of predictors p = 3, residual sum of squares = 140

Model 2. number of predictors p = 45, residual sum of squares = 115

Which model would you choose? Justify your answer.
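With σ² known, the arithmetic can be checked directly. The sketch below uses Cp = (RSS + 2dσ²)/n and counts an intercept, so d = p + 1; both are assumptions. Counting d = p instead gives 1.46 versus 2.05, and the choice is the same.

A second check assumes the models are nested. If Model 1 were correct, the 42 extra predictors would be expected to lower the RSS by about 42σ² through noise alone.

```python
n, sigma2 = 100, 1.0
# (number of predictors p, training RSS) as given in the problem
models = {"Model 1": (3, 140.0), "Model 2": (45, 115.0)}

def cp(rss, p, n, sigma2):
    # Cp = (RSS + 2 d sigma^2) / n with d = p + 1 fitted coefficients (assumes an intercept).
    d = p + 1
    return (rss + 2 * d * sigma2) / n

scores = {name: cp(rss, p, n, sigma2) for name, (p, rss) in models.items()}
best = min(scores, key=scores.get)

# Nested-model view: RSS drop from the extra predictors vs. the drop expected from pure noise.
extra_params = models["Model 2"][0] - models["Model 1"][0]
rss_drop = models["Model 1"][1] - models["Model 2"][1]
```

This gives Cp = 1.48 for Model 1 and 2.07 for Model 2. The observed RSS drop of 25 is smaller than the 42σ² expected from noise, so both views favor Model 1.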

