UNIVERSITY COLLEGE LONDON

Engineering Sciences代写 Assignment Release Date: 2nd October 2019Assignment Hand-in Date: 16th October 2019 at 11.55amFormat: Problems

Faculty of Engineering Sciences

Department of Computer Science

COMP0036: LSA – Assignment 2

Dr. Dariush Hosseini (dariush.hosseini@ucl.ac.uk)

Overview Engineering Sciences代写

- Assignment Release Date: 2nd October2019

- AssignmentHand-in Date: 16th October 2019 at 55am

- Format:Problems

Guidelines

- You should answer all THREE

- Note that not all questions carry equal

- You should submit your final report as a pdf using Turnitin accessed via themodule’s Moodle page.

- Within your report you should begin each question on a newpage.

- You should preface your report with a single page containing, on twolines:

- The module code:‘COMP0036’

- The assignment title: ‘LSA – Assignment2’

- Your report should be neat andlegible.Engineering Sciences代写

You are strongly advised to use LATEX to format the report, however this is not a hard requirement, and you may submit a report formatted with the aid of another system, or even a handwritten report, provided that you have converted it to a pdf (see above).

- Please attempt to express your answers as succinctly as succinctly as possible.

- Please note that if your answer to a question or sub-question is illegible orincomprehen- sible to the marker then you will receive no marks for that question or sub-question.Engineering Sciences代写

- Please remember to detail your working, and state clearly any assumptions which you make.

- Failure to adhere to any of the guidelines may result in question-specific deduction of marks. If warranted these deductions may be punitive.

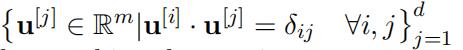

- Assumean unlabelled dataset,

, with sample mean,

, with sample mean,  , and an orthonormal basis set

, and an orthonormal basis set ,where d < m.

,where d < m.

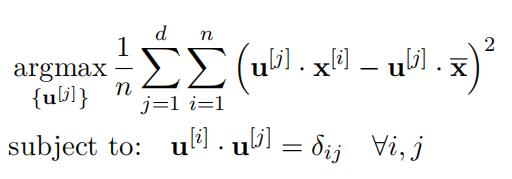

We have investigated the ‘Projected Variance Maximisation’ approach to PCA in which we are interested in finding the d-dimensional subspace spanned by

for which the sum of the sample variance of the data projected onto this subspace is max-imised.

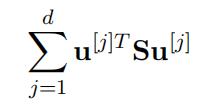

This leads to a formulation of the PCA problem as:

(1)

(1)

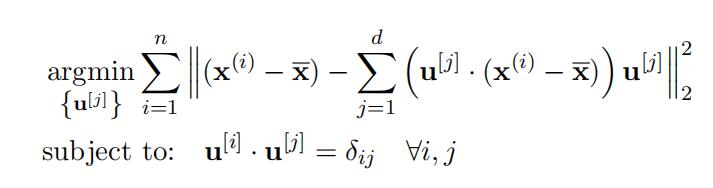

However there are several other approaches to PCA. One is the ‘Reconstruction Error Minimisation’ approach.

Here we are interested in finding the d-dimensional subspace spanned by .u[j]Σd

which minimises the reconstruction error, i.e.:(2)

(a) [6 marks]

Show that problems (1) and (2) are equivalent.

(b) [4 marks]

In each analysis we were careful to centre our input data by effectively subtracting off the mean. Why is it important to centre the data in this way?Engineering Sciences代写

(c) [6 marks]

Show that we can re-write the objective of problem (1) as follows, and provide an expression for the matrix S:

(3)

(3)

(d) [4 marks]

If we were to replace S with the sample correlation matrix and then proceed to perform PCA with this objective, under what circumstances would this form of PCA differ from the covariance matrix version?

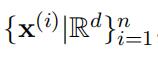

- Assume a set of unlabelledpoints,

, which we wish to separate into k clusters.Engineering Sciences代写

, which we wish to separate into k clusters.Engineering Sciences代写

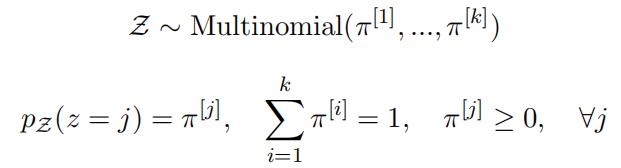

We wish to use a ‘Spherical’ Gaussian Mixture Model (GMM) for this clustering task. Here we assume that each point has an unknown, latent, cluster assignment associated with it, z ∈ {1, …, k}, which is the outcome of a multinomial random variable, Z:

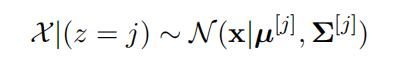

Furthermore, contingent on the cluster assignment, z, we assume that each point, x, is the outcome of a Gaussian random variable, X :

Where:

µ[j] ∈ Rd is the mean associated with cluster j, and;

Σ[j] = sI is the covariance associated with each cluster j. Here s is a constant, and I denotes the d × d identity matrix.

(a) [6 marks]

Give an expression for the log likelihood of the dataset, {x(i)}n

in terms of s and{µ[j], π[j]}k=1.Engineering Sciences代写

(b) [5 marks]

We wish to maximise this expression subject to the usual constraints using the EM algorithm.

Give an expression for the responsibilities, γi[j], in this case.

(c) [8 marks]

Explain why, as s → 0, the solution we generate from the spherical GMM EM clustering algorithm will tend towards the solution we would generate from the k-means clustering algorithm.Engineering Sciences代写

(d) [3 marks]

Describe and explain the form of the boundary which discriminates between clusters in this case.

(e) [3 marks]

Now consider the more usual GMM EM clustering algorithm, in which we assume that the covariances, Σ[j] > 0, are no longer constrained to take the isotropic form which we assumed earlier and are in general distinct ∀j.

Describe and explain the form of the boundary which discriminates between clusters in this case in general.

- (a) [4marks]

Explain the importance of the Representer Theorem?

(b) [3 marks]

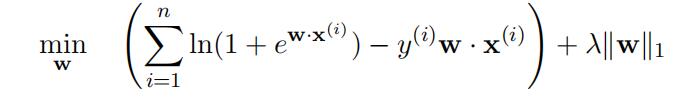

Consider the A1-regularised Logistic regression optimisation problem:

Here:

represents a set of training data, where x ∈ Rm are input attributes,

while y ∈ {0, 1} is the output label;

w ∈ Rm is the weight vector of the linear discriminant which we seek, and;

λ > 0 is some constant.

Is this problem susceptible to the kernel trick? Explain.

(c) [4 marks]

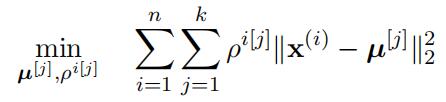

Consider the following clustering problem:

Here:

n i=1represents a set of unlabelled points, where x ∈ Rm are input attributes;

{µ[j] ∈ Rm}krepresent the k cluster centroids, and;

{ρi[j] ∈ {0, 1}}n,k represent a set of assignment variables.

Let us seek to perform this optimisation using the k-means algorithm.

Is the M-step of this procedure susceptible to the kernel trick? Explain.

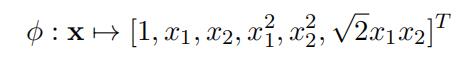

(d) [4 marks]

Assuming 2-dimensional input attributes, x = [x1, x2]T , what kernel function, κ, is associated with the following feature map, φ (express your answer in terms of vector dot products):

(e) [5 marks]

Assuming 1-dimensional input attributes, x, what feature map, φ : x ›→ φ(x), is associated with the following kernel, κ:

κ(x(i), x(j)) = exp .−σ2“x(i) − x(j)“2Σ

更多其他:C++代写 java代写 r代写 代码代写 金融代写 物理代写 考试助攻 C语言代写 finance代写 code代写 lab代写 计算机代写 data代写