Robot Vision [06-25024]

Summative Assignment 2


Submission deadline: 12:00pm (BST), Monday, 16 May 2022

Instructors: Dr. Hyung Jin Chang, Prof. Hamid Deghahni, Dr. Jianbo Jiao

Total marks: 100

Contribution to overall module mark: 25%

Submission Method: This assignment must be submitted through Canvas.

Important note on plagiarism

for further information. Plagiarism includes failure to provide proper attribution for ideas originating from external sources, and copying from other students or external sources. Changing the wording of copied text is still considered plagiarism.

It is acceptable, and even encouraged, to discuss course content and assignments with your fellow students. However, you must write your answers to all assignments individually and you must not share those answers with other students.

Instructions (Please read carefully!)

This assessment is summative and contains two parts. In Part 1, you will carry out object/scene reconstruction with Structure from Motion. In Part 2, you will use MatConvNet (https://www.vlfeat.org/matconvnet/) to perform an image classification task with a model you train yourself, a pre-trained model, and a fine-tuned model.

Your answer must be submitted to Canvas before the deadline in the form of a single zip archive file containing:

- Your answers to the questions in prose and diagrams. This should take the form of a single PDF document with the answers for each question using the provided LaTeX

- Your code and any accompanying files necessary to execute the code for any programming questions as specified in the LaTeX

and a separate PDF document with the answers for Turnitin checking (two files in total; one zip file and one PDF file). Some or all of the text of each question is emphasised using italics. This emphasis indicates a question that must be explicitly answered or a task that must be completed.

Part 1

Question 1.1 [8 marks] Calibration patterns with landmarks at known locations are often used for calibration of camera intrinsic and extrinsic properties. List the desirable properties of those calibration patterns and explain why they are important.

Question 1.2 [8 marks] There are various algorithms which detect different kinds of features. An image of the School of Computer Science of UoB is provided. Plot, on a 2 × 3 sub-plotted figure, the 100 strongest features found by the Minimum Eigenvalue, SURF, KAZE, FAST, ORB and Harris-Stephens algorithms. Include this sub-figure in your report and ensure that it appears as a sub-figure when the MATLAB file executes. Save the MATLAB sub-figure and include it in your submission.

Summary of what needs to be answered:

- Code for part 2 (username_assignment2_part1_Q1_2.m)

- The generated sub-plotted figure (report in PDF)

- The generated sub-plotted figure (.fig file)

- All images needed for the code to function
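One way the plotting task could be organised is sketched below. It is a minimal sketch, assuming the Computer Vision Toolbox is installed; the file names `school.jpg` and `Q1_2_features.fig` are placeholders chosen for illustration, not names given in the handout.

```matlab
% Sketch only: 'school.jpg' is a hypothetical name for the provided image.
I = imread('school.jpg');
if size(I, 3) == 3
    I = rgb2gray(I);                 % the detectors expect a grayscale image
end

% Detector names and function handles, in the order listed in the question.
names = {'Minimum Eigenvalue', 'SURF', 'KAZE', 'FAST', 'ORB', 'Harris-Stephens'};
detectors = {@detectMinEigenFeatures, @detectSURFFeatures, @detectKAZEFeatures, ...
             @detectFASTFeatures, @detectORBFeatures, @detectHarrisFeatures};

fig = figure;
for k = 1:numel(detectors)
    points    = detectors{k}(I);
    strongest = selectStrongest(points, 100);   % keep the 100 strongest features
    subplot(2, 3, k);
    imshow(I);
    hold on;
    plot(strongest);
    hold off;
    title(names{k});
end
savefig(fig, 'Q1_2_features.fig');   % hypothetical output name
```

Looping over function handles keeps the six panels consistent, so only the detector changes between sub-plots.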

Question 1.3 [15 marks]

You must submit your code as username_assignment2_part1_Q1_3.m.

In the absence of the source code this part will not be graded.

In this part you will work with SURF [1], which is a patented local feature detector and descriptor. It can be used for tasks such as object recognition, image registration, classification or 3D reconstruction. It is partly inspired by the SIFT descriptor. The standard version of SURF is several times faster than SIFT and it can be more robust against different image transformations than SIFT. For this task you should use the detectSURFFeatures MATLAB function.

Use SURF features to align the images B.jpg and C.jpg to A.jpg. Find matching features and estimate the transformation between the images. Apply the calculated transformation to the images to generate the recovered images (expected results are shown below). Save and submit the recovered images as username_assignment2_part1_AB.png and username_assignment2_part1_AC.png.

(a) Expected results of Question 1.3
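The detect, match, estimate and warp steps described above can be sketched as follows. This is an illustration under stated assumptions rather than a reference solution: it assumes the Computer Vision Toolbox (R2020b or later, for `estimateGeometricTransform2D`), RGB input images, and a similarity transformation, none of which the handout specifies. The output name `recovered_AB.png` is a placeholder.

```matlab
% Sketch only: assumes A.jpg and B.jpg are RGB images in the current folder.
original  = imread('A.jpg');
distorted = imread('B.jpg');
grayA = rgb2gray(original);
grayB = rgb2gray(distorted);

% Detect SURF keypoints and extract their descriptors.
ptsA = detectSURFFeatures(grayA);
ptsB = detectSURFFeatures(grayB);
[featA, validA] = extractFeatures(grayA, ptsA);
[featB, validB] = extractFeatures(grayB, ptsB);

% Match descriptors and keep the corresponding point locations.
pairs    = matchFeatures(featA, featB);
matchedA = validA(pairs(:, 1));
matchedB = validB(pairs(:, 2));

% Estimate the transform mapping B onto A ('similarity' is an assumption).
tform = estimateGeometricTransform2D(matchedB, matchedA, 'similarity');

% Warp B into the coordinate frame of A.
outputView = imref2d(size(grayA));
recovered  = imwarp(distorted, tform, 'OutputView', outputView);
imwrite(recovered, 'recovered_AB.png');   % hypothetical output name
```

The same steps apply to C.jpg; only the input and output file names change.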

Part 2

Question 2.1 [15 marks]

Carry out a classification task on a subset of ImageNet data using several pre-trained models provided by MatConvNet, then calculate their performance in terms of average cross-entropy loss and accuracy. Decide which model has the best performance based on the cross-entropy loss, and find the category that the best model classifies worst. Follow the instructions below to complete this task.

Submit your code as username_assignment2_part2_Q2_1.m.

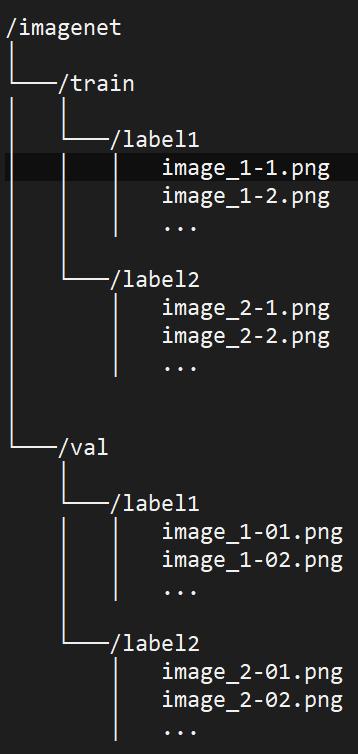

1. Load the images by unzipping imagenet.zip. The training data and validation data are stored in the /train and /val folders. In total, there are 1100 images and 10 categories in this dataset. In the training dataset, there are 100 images in each category, whereas there are 10 images per category in the validation dataset. The category of each image is given by its folder name. A simplified folder structure is shown in figure 1.

[1] Bay, Herbert, Tinne Tuytelaars, and Luc Van Gool. "SURF: Speeded up robust features." In ECCV, pp. 404-417. Springer, Berlin, Heidelberg, 2006.

Figure 1: Simplified file structure of imagenet.zip.

2. In this classification task, you only need to classify the images in the validation set.

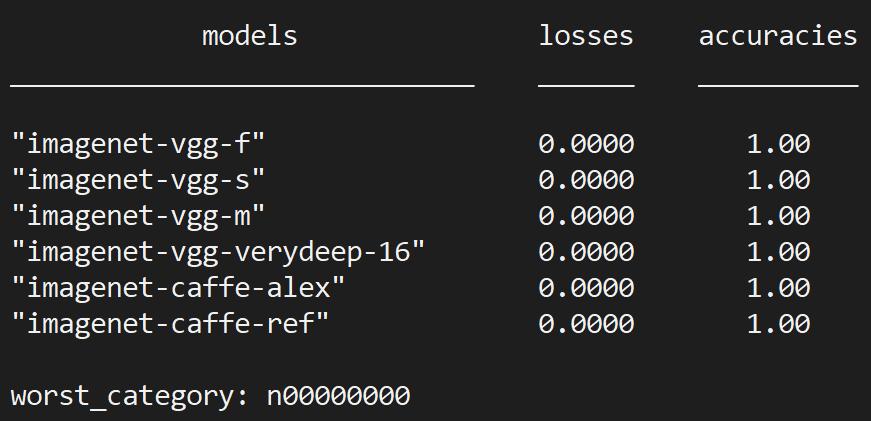

3. Download the pre-trained models from MatConvNet. You will need to carry out the classification task with all the models listed below:

∗ imagenet-vgg-f

∗ imagenet-vgg-m

∗ imagenet-vgg-s

∗ imagenet-vgg-verydeep-16

∗ imagenet-caffe-ref

∗ imagenet-caffe-alex

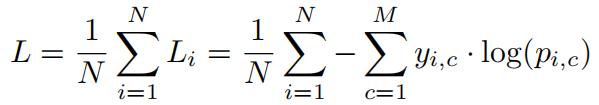

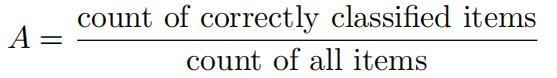

4. The formula of the average cross-entropy loss is given by

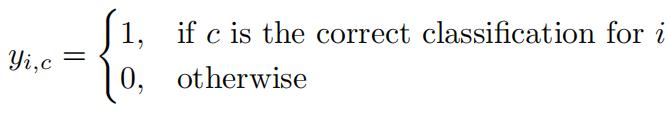

where i indexes the images, N is the number of images, c indexes the categories, M is the number of categories, and p is the predicted probability that image i is of category c.

The accuracy is given by
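For reference, the two metrics can be written in standard notation as below. This is a sketch assuming the usual definitions: the indicator $y_{i,c}$ (1 if image $i$ truly belongs to category $c$, 0 otherwise), the true label $c_i$ and the predicted label $\hat{c}_i$ are symbols introduced here and may differ from the notation in the original figures.

```latex
\begin{align}
L &= -\frac{1}{N} \sum_{i=1}^{N} \sum_{c=1}^{M} y_{i,c} \, \log p_{i,c} \\
\text{accuracy} &= \frac{1}{N} \sum_{i=1}^{N} \mathbf{1}\!\left[ \hat{c}_i = c_i \right],
\qquad \hat{c}_i = \arg\max_{c} \; p_{i,c}
\end{align}
```

Because $y_{i,c}$ is non-zero only for the true category, the inner sum reduces to the log-probability assigned to the correct class of image $i$.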

5. Store all the cross-entropy losses, accuracies, and the corresponding model names in a table. Sort the table by loss in ascending order. In addition, use a separate variable to store the worst classified category. Save both in a .MAT file named output_2-1.mat and submit it. An example of the output is shown in figure 2.

Figure 2: Example output of question 2.1.
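The metric computation and the sorted results table could be sketched as follows. The probabilities, labels, model names and the second table row are made-up toy values used only to make the example self-contained; in the real task `probs` would come from the softmax output of each network. The sketch assumes the standard average cross-entropy definition.

```matlab
% Toy data standing in for real network outputs (hypothetical values).
% probs(i, c) is the predicted probability that image i belongs to category c.
probs  = [0.7 0.2 0.1;
          0.1 0.8 0.1;
          0.3 0.4 0.3];
labels = [1; 2; 3];                        % true category index per image

% Average cross-entropy: mean negative log-probability of the true class.
N    = numel(labels);
idx  = sub2ind(size(probs), (1:N)', labels);
loss = -mean(log(probs(idx)));

% Accuracy: fraction of images whose most probable class is the true one.
[~, predicted] = max(probs, [], 2);
accuracy = mean(predicted == labels);

% Collect per-model results and sort by loss in ascending order.
modelNames = {'model-a'; 'model-b'};       % hypothetical model names
losses     = [loss; 0.35];                 % second entry is made up
accuracies = [accuracy; 0.9];              % second entry is made up
results = table(modelNames, losses, accuracies, ...
    'VariableNames', {'Model', 'Loss', 'Accuracy'});
results = sortrows(results, 'Loss');
```

Using `sub2ind` picks out the true-class probability of each image without a loop, which keeps the loss computation to a single line.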

Question 2.2 [18 marks]

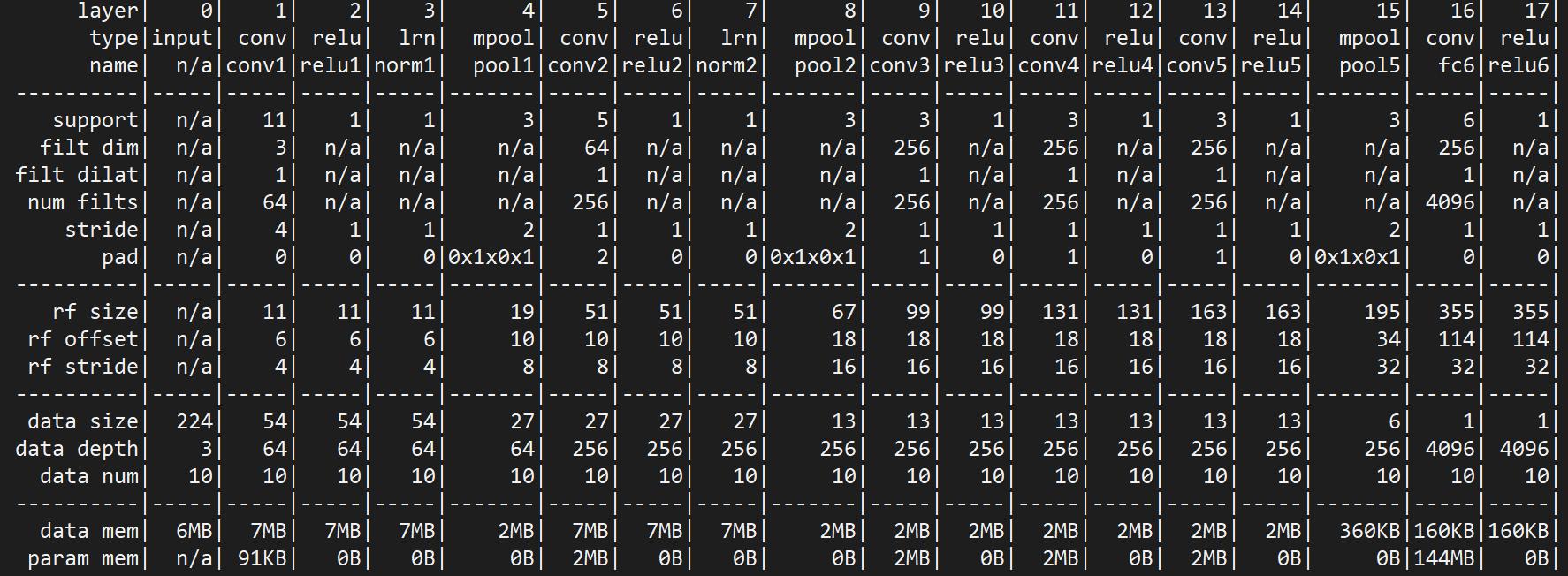

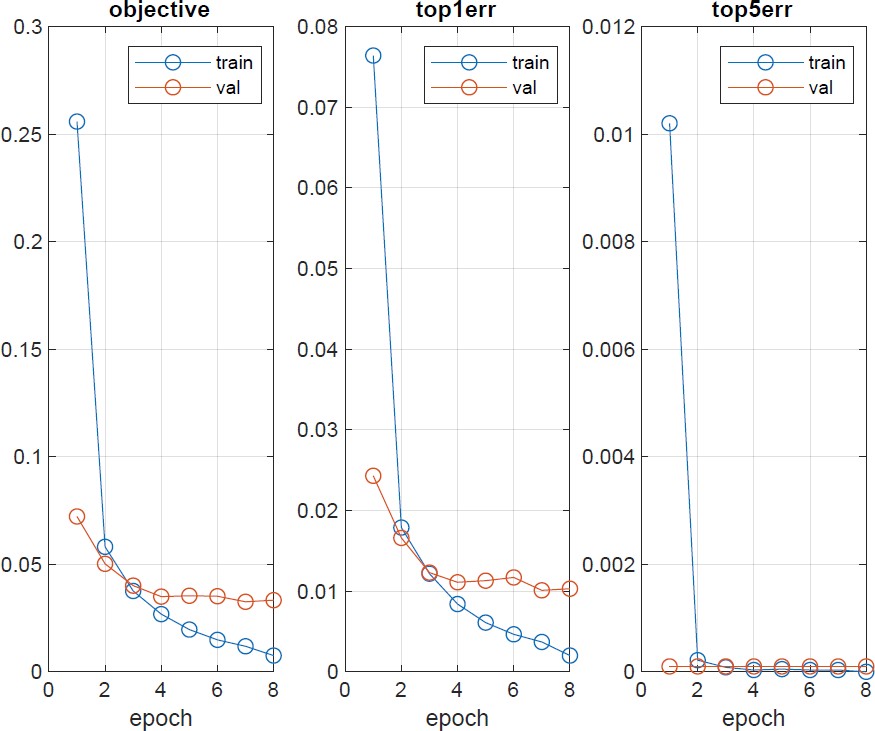

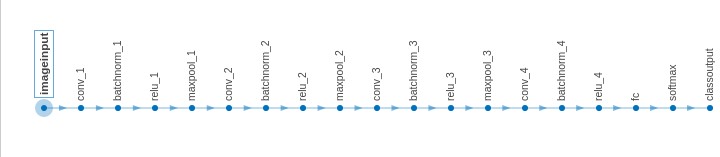

Perform transfer learning using the imagenet-vgg-f model with the ImageNet data. Design an appropriate IMDB structure to feed the data to the model. Adapt the model to classify only the 10 categories from the dataset. Retrain the model for at least 10 epochs, and show the learning curves. Visualise the learned filter of the network. Follow the instructions below to complete this task.

Submit your code as username_assignment2_part2_Q2_2.m.

1. Use the images in /imagenet/train as the training set and the images in /imagenet/val as the validation set. Before feeding them to the model, subtract the mean value from the images to make them zero-centred.

2. Do NOT load all images into memory at once. Although you can probably do this in this task without crashing your device, in a real case you will have to deal with a considerable number of images, and it is very unlikely that they will fit in memory. Therefore, you should make use of the getBatch() function to load the images only when they are needed.

3. When adapting the model, perform the following modifications to your network:

(a) Inhibit the first fully-connected (FC) layer from learning.

(b) Replace the last two layers with an FC layer and a softmaxloss layer. Modify the number of outputs to classify only 10 categories. Randomly assign the initial weights of the new layers.

(c) Insert a dropout layer just before the last FC layer, and set the rate to 0.5.

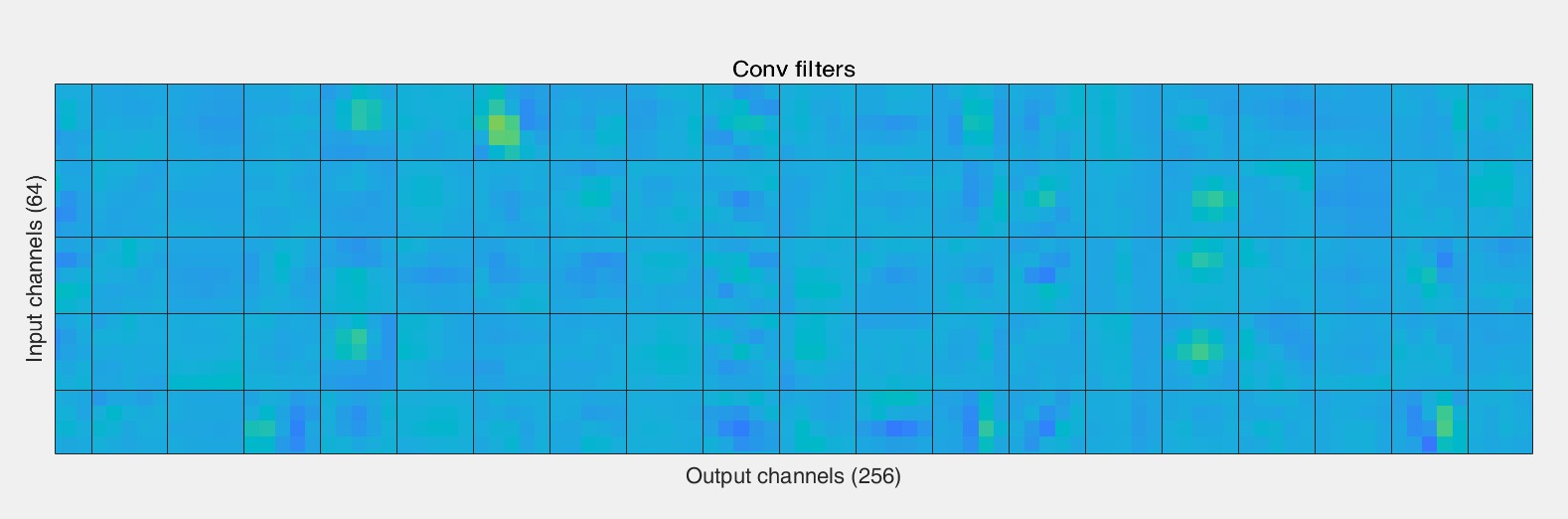

4. Visualise the weights of the first learned filter using vl_tshow().

5. Save and submit the trained model in a .MAT file named output_2-2.mat. The model should satisfy all the requirements listed in this question. An example model is shown in figure 3.

Figure 3: Example architecture of fine-tuned model.

6. Save and submit the images of the learning curves and the learned filter as output_2-2-1.png and output_2-2-2.png, respectively. Example images are shown in figure 4 and figure 5, respectively.

Figure 4: Example learning curves.

Figure 5: Example learned filter.
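The network modifications listed in this question could be sketched as below for a MatConvNet SimpleNN model. This is a hedged illustration, not a reference solution: it assumes MatConvNet is on the path, that imagenet-vgg-f.mat has been downloaded, that the first FC layer is named `fc6`, and that the last two layers of the pre-trained model are the final FC layer followed by the probability layer. The layer names `drop_new` and `fc_new` and the 0.01 weight scale are arbitrary choices made here.

```matlab
% Sketch only: assumes MatConvNet is set up and the model file is available.
net = load('imagenet-vgg-f.mat');
net = vl_simplenn_tidy(net);

% (a) Freeze the first FC layer by zeroing its learning rates.
%     The layer name 'fc6' is an assumption about this model.
isFc6 = cellfun(@(l) isfield(l, 'name') && strcmp(l.name, 'fc6'), net.layers);
fc6   = find(isFc6, 1);
net.layers{fc6}.learningRate = [0 0];

% (b) Remove the last two layers and prepare a new 10-way FC layer.
%     The input depth is read from the old final FC layer, assumed to be
%     the second-to-last layer.
inDim      = size(net.layers{end - 1}.weights{1}, 3);
net.layers = net.layers(1:end - 2);
newFc = struct('type', 'conv', 'name', 'fc_new', ...
    'weights', {{0.01 * randn(1, 1, inDim, 10, 'single'), ...
                 zeros(1, 10, 'single')}}, ...
    'stride', 1, 'pad', 0, 'learningRate', [1 2]);

% (c) Dropout goes just before the new FC layer; the loss layer goes last.
dropout = struct('type', 'dropout', 'name', 'drop_new', 'rate', 0.5);
loss    = struct('type', 'softmaxloss');
net.layers = [net.layers(:)', {dropout, newFc, loss}];
```

Looking the frozen layer up by name rather than by index avoids hard-coding a position that may differ between model versions.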

Question 2.3 [6 marks]

Use the model trained in Question 2.2 to carry out the same classification task as described in Question 2.1. Before using it, remove the layers that are needed only for training. Calculate the loss and accuracy for the trained model. Follow the instructions below to complete this task.

Submit your code as username_assignment2_part2_Q2_3.m.

- Load the network trained in Question 2.2, remove the dropout layer, and replace the softmaxloss layer with a softmax layer.

- Carry out the classification task using only the validation set, as in Question 2.1.

- Save and submit the calculated loss and accuracy in a .MAT file named output_2-3.mat.
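The layer changes in the first step can be sketched on a toy layer list, assuming the SimpleNN convention that `net.layers` is a cell array of structs with a `type` field. The four-layer network below is a stand-in invented for illustration; in practice `net` would be loaded from the file saved in Question 2.2.

```matlab
% Toy stand-in for the trained network (hypothetical layer list).
net.layers = {struct('type', 'conv'), ...
              struct('type', 'dropout'), ...
              struct('type', 'conv'), ...
              struct('type', 'softmaxloss')};

% Remove every dropout layer, since dropout is only needed during training.
isDropout = cellfun(@(l) strcmp(l.type, 'dropout'), net.layers);
net.layers(isDropout) = [];

% Replace the final softmaxloss layer with a plain softmax layer so the
% network outputs class probabilities instead of a training loss.
net.layers{end} = struct('type', 'softmax');
```

With a softmax output, the probabilities can be fed straight into the same loss and accuracy calculation used in Question 2.1.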

Question 2.4 [12 marks]

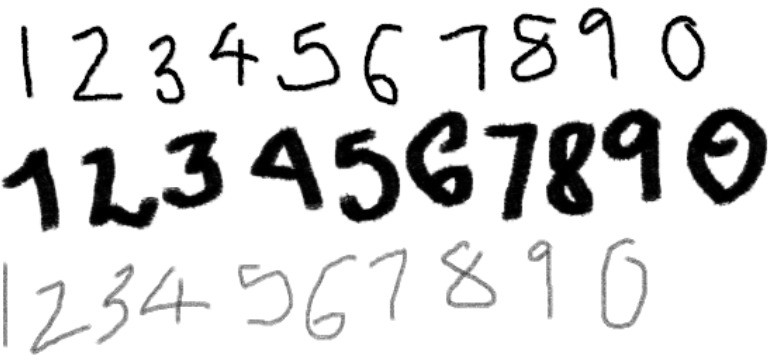

Train a network using the MNIST handwritten digit database [2] for classification. The task can be solved using a CPU-only execution environment.

Submit your code as username_assignment2_part2_Q2_4.m.

1. Show 30 random example images from MNIST in a 5 × 6 subplot. Count the number of labels in each category and include the result in your report. Use an 80:20 train-test split.

2. Augment the training data using the imageDataAugmenter function with random rotation [-30, 20], random reflection in both the top-bottom and the left-right directions, random X translation [-2, 4] and random Y translation [-3, 2]. Show example images of the augmented data and include them in your report.

[2] https://en.wikipedia.org/wiki/MNIST_database

3. Implement the network architecture shown in Figure 6. The input image size is 28 × 28, and the pool size and stride are both 2. Use the Adam optimizer with lr = 0.02, validation frequency 50 and validation patience 20, and shuffle the training data before each training epoch (train the network for 35 epochs). Plot the training progress and include it in your written report. Save the model and submit it with your report. What is the validation accuracy of your model?

4. Create your own test data (3 sets of digits handwritten by you, 30 images in total; try to make them look as different as possible. You are free to use any device or software, e.g. Microsoft Paint, to create the test data). Include it in your report as in Figure 7 and report the overall and per-category accuracy of your model on the test images. The test images must be submitted with your code, otherwise this part of the task will not be graded. Analyse your network with the built-in analyzeNetwork MATLAB function and include the analysis results as a figure in your report.

Figure 6: Network architecture

Figure 7: Handwritten digits
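The augmentation and training settings named in steps 2 and 3 map onto Deep Learning Toolbox options roughly as in the sketch below. It only builds the configuration objects; the network layers, the datastores and the `ValidationData` option (which must be supplied for the validation settings to take effect) are left out because they depend on how the data is loaded.

```matlab
% Sketch only: requires the Deep Learning Toolbox.
augmenter = imageDataAugmenter( ...
    'RandRotation',     [-30 20], ...
    'RandXReflection',  true, ...      % left-right reflection
    'RandYReflection',  true, ...      % top-bottom reflection
    'RandXTranslation', [-2 4], ...
    'RandYTranslation', [-3 2]);

options = trainingOptions('adam', ...
    'InitialLearnRate',    0.02, ...
    'MaxEpochs',           35, ...
    'Shuffle',             'every-epoch', ...
    'ValidationFrequency', 50, ...
    'ValidationPatience',  20, ...
    'Plots',               'training-progress');
```

The augmenter would typically be passed to `augmentedImageDatastore` through its `'DataAugmentation'` option, and `options` to `trainNetwork` together with the layer array.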

Question 2.5 [18 marks]

You are provided with a code base for training an MNIST classifier (SA_cnn_mnist_experiments.m). However, the code has some problems/bugs, so it does not run correctly. Identify those problems and get the model to run correctly. Train the model so that the top-1 error on the validation (val) set is less than 0.1.

- List the problems that prevent the code from running correctly.

- Show the final top-1 error on the val set (there is a pre-defined function to show the result in a plot).

- Submit all the corrected code that leads to the above results as one zip file (username_assignment2_part2_Q2_5.zip).

Note that you are not allowed to change the number of epochs (i.e. total training time) and the main architecture of the model (e.g. the depth of the deep network).