Homework 6

Numerical Computing, Spring 2022

代写数值计算作业 Explain carefully how the plot demonstrates what we discussed about the convergence of these 3 methods in class.

Be sure to read my Notes on Eigenvalues and Singular Values before at- tempting this homework. You may find it helpful to experiment with Matlab while doing the homework, but be sure to explain why statements are true, not just: “Matlab says so”. As before, if you are stuck on some questions, consult with other students or the web, remembering to acknowledge any help you get, or come to my office hours or send email to make an appointment with me.

1.Prove the following statements, using the basic definition of eigenvalues and eigenvectors, orgive a counterexample showing the statement is not true. Assume A is an n × n real

(a)If λ is an eigenvalue of A and α ∈ R, then λ + α is an eigenvalueof A + αI, where I is the identity matrix.

(b)If λ is an eigenvalue of A and α ∈ R, then αλ is an eigenvalue of α A.

(c)If λ is an eigenvalue of A, then for any positive integer k, λkis an eigenvalue of Ak. (Here Ak means the matrix product AA · · A, where A appears in the product k times.) 代写数值计算作业

(d)If B is “similar” to A, which means that there is a nonsingular matrix S such that B = SAS−1, then if λ is an eigenvalue of A it is also an eigenvalue of B.

(e)Every matrix has at least two distinct eigenvalues, say λ and µ,with λ ƒ= µ.

(f)Every real matrix has a real eigenvalue.

(g)If A is singular, then it has an eigenvalue equal to zero.

(h)If A is singular, then it has a singular value equal to zero.

(i)If all the eigenvalues of a matrix A are zero, then A =0.

(j)If all the singular values of a matrix A are zero, then A =0.

2.Suppose A is a real square n × n matrix with SVD given by A = U ΣV T. 代写数值计算作业

(a)What are the eigenvalues and eigenvectors of the real symmetric ma- trix ATA in terms of Σ, U and V ?

(b)What are the eigenvalues and eigenvectors of the real symmetric ma- trix AATin terms of Σ, U and V ?

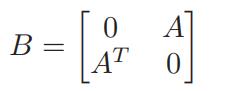

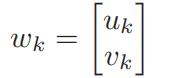

3.Suppose A is a real square n × n matrix with SVD given by A = U ΣV T. Consider the matrix

and the vector

where uk is the kth column of U and vk is the kth column of V . Show that, for k = 1, . . . n, the vector wk is an eigenvector of B: what is the corresponding eigenvalue? Also show that the vectors wk, k = 1, . . . n, are mutually orthogonal, and that you can make them orthonormal by scaling them appropriately.

4.(This is a continuation of the previous question.) The matrix B is sym- metricand has dimension 2n × 2n, so it must have 2n orthonormal eigen- vectors. The scaled vectors wk, k = 1, . . . , n account for n of Find n more eigenvectors of B along with their corresponding eigenvalues, and, by scaling them appropriately, show that all 2n eigenvectors are mutually orthonormal.

5.Confirmthe plot at the top of 4 of the notes on eigenvalues and singular values as follows.

Using cos, sin and linspace, generate lots of equally spaced points (vectors in R2) on the unit circle in two dimensions and plot them to confirm you generated them correctly, but use axis equal be- cause otherwise the circle will look like an ellipse. Then, using a randomly generated 2 × 2 matrix A, multiply A onto these vectors and plot the re- sulting vectors; this time, they should trace out an ellipse — continue to use axis equal. Use as many vectors as you need to make a nice picture, and try enough choices of A so that you can choose one where the ellipse doesn’t look too much like a circle. Also, mark v1, v2 on the circle plot and σ1u1, σ2u2 on the ellipse plot as shown on p. 4 (compute these first from svd).

6.Recall that your function polyInterpOrApprox in Homework 3 generates polynomial approximation to data using least squares. 代写数值计算作业

Modify the func- tion to accept a 5th argument, wantPlot, which we can set to 0 if wedon’t want a plot to be generated, and a 6th argument alg, which spec- ifies which of the following algorithms to use to solve for the coefficients x solving the least squares problem, where A is the Vandermonde matrix, as follows:

1.x=A\b, as in Homework3

2.the normal equations, implemented by x =(A’*A)\(A’*b)

3.the normal equations, but by first explicitly computing theCholesky factorization of AT A using Matlab’s chol, and then doing forward and back substitution using \ (see A&G, p. 178)

4.usingthe reduced QR factorization, by calling A&G’s house and lsol (this does not compute Qˆ explicitly, but solves Rˆx = QˆT b, obtaining QˆT b by multiplying out the Householder transformations onto b (see also my QR notes, p. 5)

5.using the reduced QR factorization, using Matlab’s qr (this does compute Qˆ explicitly), and then solving Rˆx = QˆT b 代写数值计算作业

6.usingMatlab’s svd (as explained in my SVD notes, p. 8; see also A&G, p. 203, but in this application the least squares problem is full rank, so you can take r = n, and there is no issue of looking for a minimum norm solution: the solution is unique). When this algorithm is selected, polyInterpOrApprox should also return the condition number κ(A) = σ1/σn. This can be returned as a 4th output argument condA. The other five methods can return nan for this output argument.

Without plotting the polynomials, test your code on this data (which you also used in Homework 3), and make sure all 6 algorithms give approxi- mately the same answer x before continuing to the next question. (If not, you have a bug that you need to track down first.)

7.(This is a continuation of the previous question.)

Set the input parameter t to be the vector of 101 equally spaced points from 0 to 1 and set the data vector bto the function cos(4t) evaluated at these points, which you can compute by b = cos(4*t). Set the degree input parameter deg to 8, in order to compute approximations to the given data by a polynomial of degree Without plotting the polynomials, print the vector of coefficients x showing 16 digits for each of the 6 choices of alg in a table. They won’t all be exactly the same. Which do you think are most accurate and why? 代写数值计算作业

8.(This is a continuation of the previous question.) What happens if you increase the degree deg? Does the difference between the x solutions become more significant? Is this related to the condition number of A computed by the SVDalgorithm? If you turn the plotting on and plot the approximating polynomial corresponding to x, can you detect any differences just looking at the plots, for sufficiently large degree? Use the “zoom” tool to get a close-up view, particularly near the end points t = 0 and t = If you continue increasing the degree deg sufficiently, one of the six algorithms may generate an error return. Which one is it and why?

9.(20 points) Implement the (normalized) power method (AG 289), in- verseiteration (AG p. 294) and the Rayleigh quotient iteration (AG p. 295). 代写数值计算作业

The first two use the notation λ(k) for the Rayleigh quotient vT Avk and the third uses the notation µk; let’s call all these µk for simplicity. In inverse iteration, for efficiency you need to do the LU factorization of the matrix A−αI outside the loop and then use forward and back substitution (using \ is fine) inside the loop. This is not possible for the Rayleigh quo- tient iteration because the matrix depends on the iteration counter k. In

all cases compute and display the eigenvalue residual norm “Avk − µkvk” at the end of the loop and terminate when either the norm of the residual is less than 10−14 or the iteration counter k reaches 100. Also print k, µk and the norm of the residual at the end of the loop using fprintf. 代写数值计算作业

Run all 3 iterations on the tridiagonal matrix T computed from calling tridiagExample.m, with inputs N=100 and nonsym=0.1. Set v0 to the vector of all ones. For inverse iteration, use α = 4. Using semilogy, plot the residual norms of all 3 methods on the same plot. Explain carefully how the plot demonstrates what we discussed about the convergence of these 3 methods in class. Note that the Rayleigh quotient iteration does not find the largest eigenvalue of T , unlike the other 2 methods.