Introduction to AI – Probabilistic Reasoning


Final Exam [85 points]

This exam is open book, open notes. You can read our slides (annotated or plain), read the class online textbook, and use a calculator on your mobile devices or computers. You are not allowed to collaborate with anyone from our class or online, nor seek help on social media. No collaboration is allowed.

By signing your name below, you are agreeing that you will never discuss or share any part of this exam with anyone who is not currently taking the exam or is not a member of the course staff. This includes posting any information about this exam on WeChat or any other social media. Discussing or sharing any aspect of this exam with anyone outside of this room constitutes a violation of the academic integrity policy of the summer program.

To acknowledge that you understand the above policy, please write the phrase below in the box, and then sign your name:

“I excel with integrity”

Signature:

Name (print):

Student ID:

1. Probabilistic Reasoning and Noisy-OR [12 points]

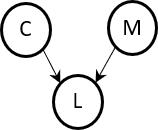

Suppose that each morning there are two events that might make me late for my class: car trouble and my children having a meltdown. Let C ∈ {0,1} represent whether or not I have car trouble, M ∈ {0,1} represent whether or not my kids have a meltdown, and L ∈ {0,1} represent whether or not I am late for class. The Bayes Net that models this situation is shown in the figure.

(a) (2 pts) The influence of C and M on L is modeled with a noisy-OR relationship. Fill in the missing values in the noisy-OR conditional probability table for this network. You do NOT need to show your work for part (a).

| C | M | P(L = 1 \| C, M) |
|---|---|---|
| 0 | 0 | |
| 0 | 1 | 0.2 |
| 1 | 0 | |
| 1 | 1 | 0.44 |
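For reference, the noisy-OR construction can be sketched in a few lines of Python. This is only an illustration, not a solution to part (a): the function name `noisy_or` and the parameter values 0.5 and 0.1 are hypothetical and are not the values of this network. The sketch assumes the standard leak-free form, in which each active parent independently fails to trigger the effect with probability 1 − p_i.

```python
from itertools import product

def noisy_or(p, parents):
    """P(effect = 1 | parents) under a leak-free noisy-OR.

    p[i] is the probability that parent i alone triggers the effect;
    parents[i] is 0 or 1.
    """
    prob_all_fail = 1.0
    for p_i, x_i in zip(p, parents):
        if x_i == 1:
            # An active parent fails to trigger the effect with prob. 1 - p_i.
            prob_all_fail *= 1.0 - p_i
    return 1.0 - prob_all_fail

# Hypothetical parameters, chosen only for illustration.
p = [0.5, 0.1]
cpt = {xs: noisy_or(p, xs) for xs in product([0, 1], repeat=2)}
```

With no active parent the product is empty, so the effect has probability 0. With both parents active, the failure probabilities multiply.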

(b) (4 pts) Order the following values from LEAST to GREATEST going down the page. If two or more values are the same, write them next to each other on the same line. You do not have to use all four lines.

(c) (6 pts) Let P(M = 1) = 0.05 and P(C = 1) = 0.01. Compute the values of P(M = 1|C = 1, L = 1) and P(M = 1|C = 0, L = 1). You do not have to do the final arithmetic steps; you may leave your answers in terms of arithmetic operations on numerical values. SHOW YOUR WORK FOR PART (c).

2. Conditional Independence [11 points]

(a) (3 pts) Consider the following equality:

𝑃(𝑄, 𝑅|𝑆, 𝑇, 𝐻,𝐼) = 𝑃(𝑄|𝑆,𝐼)𝑃(𝑅|𝑄, 𝑆, 𝐻,𝐼)

Which of the following statements is/are implied by this equality? Write the letters of all (zero or more) that are implied in the blank. If none are implied write “None”.

A. Q is conditionally independent from H given {S, I}

B. R is conditionally independent from T given {Q, S, H, I}

C. S is conditionally independent from T and H given {I}

Answer:

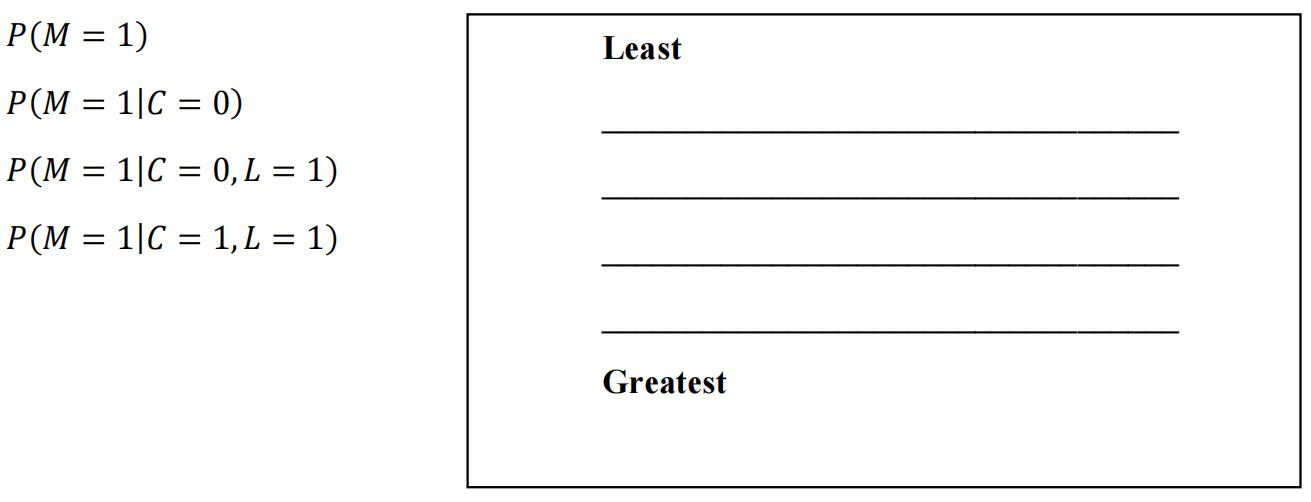

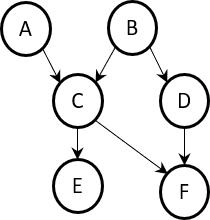

(b) (5 pts) With respect to the BN graph below, state whether each of the following statements of conditional independence is true or false.

(i) 𝑃(𝐴, 𝐻|𝐶, 𝐸) = 𝑃(𝐴|𝐶, 𝐸)𝑃(𝐻|𝐶, 𝐸)

(ii) 𝑃(𝐴|𝐵) = 𝑃(𝐴)

(iii) 𝑃(𝐴,𝐷|𝐻) = 𝑃(𝐴|𝐻)𝑃(𝐷|𝐻)

(iv) 𝑃(𝐸|𝐶, 𝐵,𝐷, 𝐺) = 𝑃(𝐸|𝐶, 𝐵)

(v) 𝑃(𝐺, 𝐻|𝐶, 𝐸, 𝐴) = 𝑃(𝐺|𝐶, 𝐴)𝑃(𝐻|𝐶, 𝐸, 𝐴)

(c) (3 pts) Prove that 𝑃(𝐻|𝐶,𝐷) ≠ 𝑃(𝐻|𝐶) using d-separation.

3. General Inference in Bayesian Networks [10 points]

Consider the Bayesian Network given below where all variables are binary.

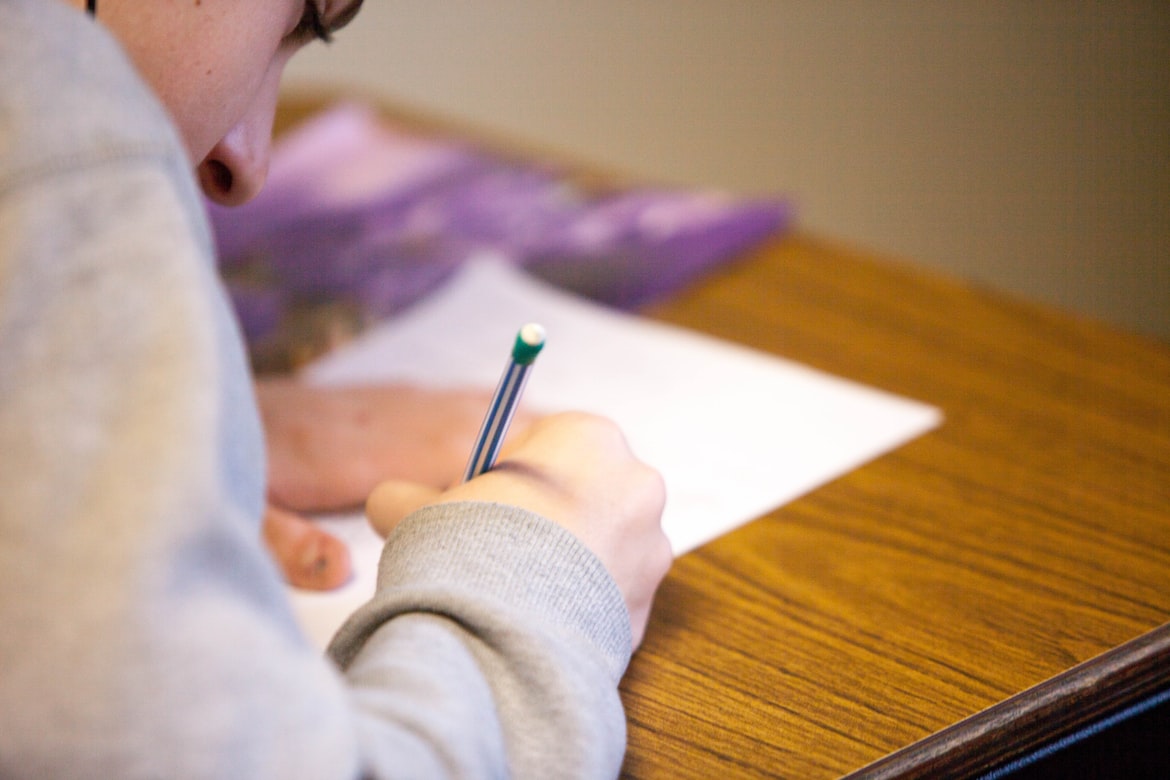

(a) (2 pts) Using the product rule and marginalization, the posterior probability 𝑃(𝐶 = 1|𝐹 = 0, 𝐸 = 1) can be expressed as:

$$P(C = 1 \mid F = 0, E = 1) = \frac{P(C = 1, F = 0, E = 1)}{\sum_{c} P(C = c, F = 0, E = 1)}$$

Express the joint probability 𝑃(𝐶 = 𝑐, 𝐹 = 0,𝐸 = 1) in terms of only the probabilities given in the conditional probability tables in the Bayesian Network.

(b) (6 pts) Fill in the table with the number of multiplications, additions and divisions it takes to compute 𝑃(𝐶 = 1, 𝐹 = 0, 𝐸 = 1), 𝑃(𝐶 = 0, 𝐹 = 0, 𝐸 = 1), and then the total number to compute 𝑃(𝐶 = 1|𝐹 = 0, 𝐸 = 1) using the brute force (enumeration) expression you wrote in part (a).

| Phase of algorithm | # multiplications | # additions | # divisions |
|---|---|---|---|
| Compute 𝑃(𝐶 = 1, 𝐹 = 0, 𝐸 = 1) | | | 0 |
| Compute 𝑃(𝐶 = 0, 𝐹 = 0, 𝐸 = 1) | | | 0 |
| Combine above values to calculate 𝑃(𝐶 = 1 \| 𝐹 = 0, 𝐸 = 1) | 0 | 1 | 1 |
| Total | | | 1 |

(c) (2 pts) Does the Variable Elimination algorithm using the elimination order E, F, A, D, B use fewer arithmetic operations than the brute-force (enumeration) approach to compute 𝑃(𝐶 = 1|𝐹 = 0, 𝐸 = 1) in this network? Briefly explain why or why not.

4. Maximum Likelihood Learning [20 points]

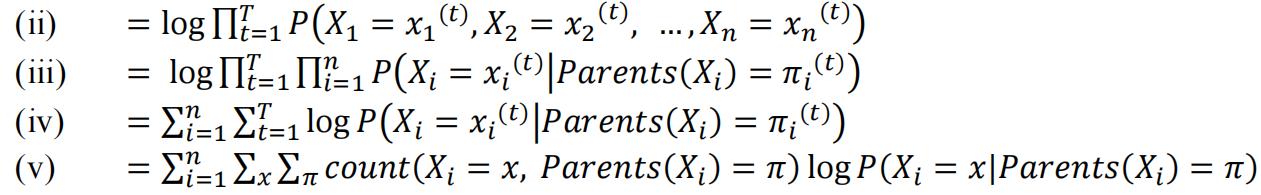

(a)(6 pts) Recall the derivation of the log-likelihood for a Bayesian Network that we did in class, shown below. Briefly justify each step.

(i) ℒ = log 𝑃(𝑑𝑎𝑡𝑎)

| Step | Justification |
|---|---|
| (i) | |
| (ii) | |
| (iii) | Move log in and swap sums |
| (iv) | |

(b) Suppose that A, B, and C are binary random variables and that we observe T = 1000 samples from their joint distribution 𝑃(𝐴, 𝐵, 𝐶) with the following frequencies:

| A | B | C | count(A, B, C) |
|---|---|---|---|
| 0 | 0 | 0 | 100 |
| 0 | 0 | 1 | 50 |
| 0 | 1 | 0 | 200 |
| 0 | 1 | 1 | 150 |
| 1 | 0 | 0 | 50 |
| 1 | 0 | 1 | 100 |
| 1 | 1 | 0 | 150 |
| 1 | 1 | 1 | 200 |
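As a reminder of how maximum likelihood estimates are read off a count table, here is a small Python sketch using the counts above. The helper name `ml_estimate` and its dictionary-based interface are hypothetical, chosen only for illustration. The example query, the marginal 𝑃𝑀𝐿(𝐶 = 1), is not one of the quantities requested in the parts that follow.

```python
from fractions import Fraction

# Counts copied from the table above: (A, B, C) -> count.
counts = {
    (0, 0, 0): 100, (0, 0, 1): 50,
    (0, 1, 0): 200, (0, 1, 1): 150,
    (1, 0, 0): 50,  (1, 0, 1): 100,
    (1, 1, 0): 150, (1, 1, 1): 200,
}
NAMES = ("A", "B", "C")

def matches(row, cond):
    """True if the row agrees with every variable assignment in cond."""
    return all(row[NAMES.index(name)] == value for name, value in cond.items())

def ml_estimate(counts, query, given=None):
    """Relative-frequency (ML) estimate of P(query | given).

    Raises ZeroDivisionError if no sample matches `given`.
    """
    given = given or {}
    denom = sum(n for row, n in counts.items() if matches(row, given))
    numer = sum(n for row, n in counts.items()
                if matches(row, given) and matches(row, query))
    return Fraction(numer, denom)

total = sum(counts.values())              # number of samples T
p_c1 = ml_estimate(counts, {"C": 1})      # marginal estimate of P(C = 1)
```

Exact fractions are used so that ratios of counts can be compared without rounding error.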

(i) (3 pts) From the data in the table above, calculate the Maximum Likelihood (ML) estimates for the following:

𝑃𝑀𝐿(𝐴 = 1), 𝑃𝑀𝐿(𝐵 = 1|𝐴 = 0), 𝑃𝑀𝐿(𝐵 = 1|𝐴 = 1)

𝑃𝑀𝐿(𝐴 = 1) =_________________________

𝑃𝑀𝐿(𝐵 = 1|𝐴 = 0) =_______________________

𝑃𝑀𝐿(𝐵 = 1|𝐴 = 1) = ___________________________

(ii) (2 pts) From your answers to part (i), does the data support the claim that A and B are marginally independent? Briefly justify your answer.

(iii) (4 pts) From the data in the table above, calculate the Maximum Likelihood (ML) estimates for the following:

𝑃𝑀𝐿(𝐶 = 1|𝐴 = 0, 𝐵 = 0), 𝑃𝑀𝐿(𝐶 = 1|𝐴 = 0,𝐵 = 1), 𝑃𝑀𝐿(𝐶 = 1|𝐴 = 1, 𝐵 = 0),

𝑃𝑀𝐿(𝐶 = 1|𝐴 = 1, 𝐵 = 1)

𝑃𝑀𝐿(𝐶 = 1|𝐴 = 0, 𝐵 = 0) =__________________

𝑃𝑀𝐿(𝐶 = 1|𝐴 = 0, 𝐵 = 1) =__________________

𝑃𝑀𝐿(𝐶 = 1|𝐴 = 1, 𝐵 = 0) =__________________

𝑃𝑀𝐿(𝐶 = 1|𝐴 = 1, 𝐵 = 1) =___________________

(iv) (1 pt) From your answers to part (iii), does the data support the claim that C is conditionally independent from B given A? Briefly justify your answer.

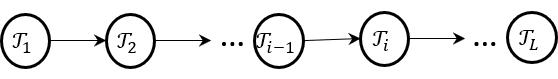

(v) (4 pts) Finally, consider a first-order (bigram) Markov Model defined over the 3-letter alphabet A = {x, y, z}. Let τᵢ ∈ A denote the i-th token in a sequence. The model is shown below:

List all of the parameters of this model (i.e. the parameters whose value you need to specify in order to use the model). You can use a shorthand notation as long as it’s clear what all the parameters are.

5. Implementing Hangman [6 points]

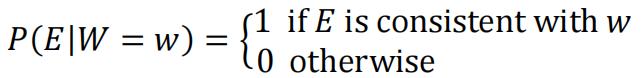

Recall from your implementation of the Hangman game in HW2 that the probability of evidence E given word w is:

$$P(E \mid W = w) = \begin{cases} 1 & \text{if } E \text{ is consistent with } w \\ 0 & \text{otherwise} \end{cases}$$

where E contains the letters guessed so far along with the places (if any) where they appear, and W represents a word in the dictionary. E is consistent with w if all the letters in E that have places listed appear in w in those places, and all the letters in E that do not have places listed do not appear in w.

Assume that E is represented as a map named evidence where the keys are letters that have been guessed and the values are lists of integers (which may be empty) containing all the places that the letter appears. For example, for the following state of the game:

Correctly guessed: D – – I D

Incorrectly guessed: {O, M}

evidence['D'] is [1, 5],

evidence['I'] is [4],

evidence['O'] and evidence['M'] are [], and no other keys appear in the map.
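To make the representation concrete, the game state above can be written as a Python dictionary, and the displayed pattern can be recovered from it. This is only a sketch of the data structure, not the function requested below. The helper name `render_pattern` is hypothetical, the word length 5 is read off the example, and places are taken to be 1-indexed, as the example implies.

```python
def render_pattern(evidence, length):
    """Rebuild the 'correctly guessed' display from the evidence map."""
    slots = ["-"] * length
    for letter, places in evidence.items():
        for place in places:
            slots[place - 1] = letter  # places are 1-indexed
    return " ".join(slots)

# The example state: D and I were guessed correctly, O and M incorrectly.
evidence = {"D": [1, 5], "I": [4], "O": [], "M": []}

pattern = render_pattern(evidence, 5)
# Letters whose list of places is empty were guessed incorrectly.
incorrect = sorted(letter for letter, places in evidence.items() if not places)
```

Here `pattern` is the string `D - - I D`, and `incorrect` contains the letters M and O.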

Complete the pseudocode to compute and return 𝑃(𝐸|𝑊 = 𝑤). You do NOT have to use correct syntax. Precise English statements are acceptable.

    evidenceGivenWord(evidence, w):
        for each letter which is a key in evidence:
            let places = evidence[letter]  // places is a list

6. EM Algorithm [18 points]

For all parts of this problem except part (d) show your work, justify each step, and simplify your answers as much as possible. In part (d) simply write your final simplified answer.

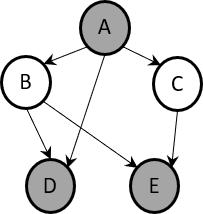

Consider the belief network shown below, with CPTs for 𝑃(𝐴), 𝑃(𝐵|𝐴), 𝑃(𝐶|𝐴), 𝑃(𝐷|𝐴, 𝐵), 𝑃(𝐸|𝐵, 𝐶). Suppose the values of the nodes B and C are unobserved (hidden), while the values of the shaded nodes A, D, and E are observed.

(a) Posterior probability (4 pts)

Compute the posterior probability 𝑃(𝐵, 𝐶|𝐴,𝐷, 𝐸) over the hidden nodes in terms of the CPTs of this belief network. Show your work, justify each step, and simplify your answer as much as possible.

(b) Posterior probability (4 pts)

Compute the posterior probabilities 𝑃(𝐵|𝐴,𝐷, 𝐸) and 𝑃(𝐶|𝐴,𝐷, 𝐸) in terms of the posterior probability 𝑃(𝐵, 𝐶|𝐴,𝐷, 𝐸) from part (a). Show your work, justify each step and simplify your answer as much as possible.

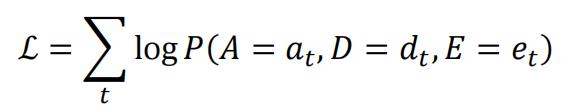

(c) Log-likelihood (4 pts)

Consider a data set of T partially labeled examples $\{(a_t, d_t, e_t)\}_{t=1}^{T}$ over the nodes A, D and E of the network. The log-likelihood of the data set is given by:

$$\mathcal{L} = \sum_{t=1}^{T} \log P(A = a_t, D = d_t, E = e_t)$$

Compute this log-likelihood in terms of the CPTs of the belief network above. Show your work, justify each step and simplify your answer as much as possible.

(d) EM Algorithm (6 pts)

Consider the EM algorithm that updates the CPTs to maximize the log-likelihood of the data set in part (c). Complete the numerator and denominator in the expressions for the EM updates shown below.

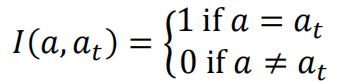

Suggested notation. Use $P(b, c \mid a_t, d_t, e_t)$, $P(b \mid a_t, d_t, e_t)$, $P(c \mid a_t, d_t, e_t)$ for the posterior probabilities computed in parts (a) and (b). Also use indicator functions such as $I(a, a_t)$, where $I(a, a_t) = 1$ if $a = a_t$ and $0$ otherwise.

Place your answers in the corresponding blanks. You do NOT need to show your work.

𝑃(𝐵 = 𝑏|𝐴 = 𝑎) ← ____________________________________

𝑃(𝐷 = 𝑑|𝐴 = 𝑎, 𝐵 = 𝑏) ← _________________________________________

𝑃(𝐸 = 𝑒|𝐵 = 𝑏, 𝐶 = 𝑐) ← _________________________________________

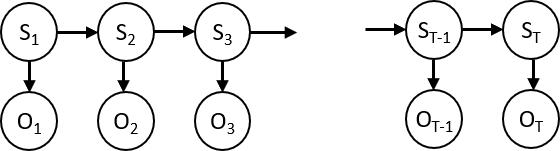

7. Hidden Markov Models [8 points]

For all parts of this problem, show your work and justify each step in your derivation.

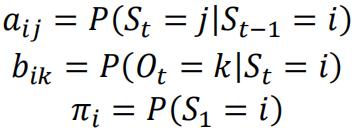

Consider a discrete hidden Markov Model (HMM) with the belief network shown below. Let $S_t \in \{1, 2, \ldots, n\}$ denote the hidden state of the HMM at time t, and let $O_t \in \{1, 2, \ldots, m\}$ denote the observation at time t. As in class, let

$$a_{ij} = P(S_{t+1} = j \mid S_t = i), \qquad b_{ik} = P(O_t = k \mid S_t = i), \qquad \pi_i = P(S_1 = i)$$

denote the transition matrix, the emission matrix and the initial state distribution, respectively.
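To make the roles of the three parameter sets concrete, the generative process of an HMM can be sketched in Python. This is an illustration under stated assumptions, not part of any answer. It assumes the usual conventions that `a[i][j]` is the probability of moving from state i to state j, `b[i][k]` is the probability of emitting symbol k in state i, and `pi[i]` is the probability of starting in state i. States and observations are 0-indexed in the code, and the numerical values are hypothetical.

```python
import random

def sample_hmm(pi, a, b, T, rng):
    """Draw hidden states and observations of length T from an HMM."""
    states, observations = [], []
    # The first state is drawn from the initial state distribution.
    s = rng.choices(range(len(pi)), weights=pi)[0]
    for _ in range(T):
        states.append(s)
        # Each observation depends only on the current hidden state.
        observations.append(rng.choices(range(len(b[s])), weights=b[s])[0])
        # The next state depends only on the current hidden state.
        s = rng.choices(range(len(a[s])), weights=a[s])[0]
    return states, observations

# Hypothetical parameters: n = 2 hidden states, m = 3 observation symbols.
pi = [0.6, 0.4]
a = [[0.7, 0.3],
     [0.2, 0.8]]
b = [[0.5, 0.4, 0.1],
     [0.1, 0.3, 0.6]]

states, observations = sample_hmm(pi, a, b, T=10, rng=random.Random(0))
```

Each row of `a` and `b` is a probability distribution, so each row sums to 1, as does `pi`.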

(a) Initial condition (4 pts)

Compute the probability $P(O_1 = o_1, S_1 = i)$ in terms of the parameters $\{\pi_i, a_{ij}, b_{ik}\}$ of the HMM. Show your work and justify each step.

(b) Forward algorithm (4 pts)

Consider the probabilities $\alpha_{it} = P(o_1, o_2, \ldots, o_t, S_t = i)$ for an observed sequence $\{o_t\}_{t=1}^{T}$. Derive an efficient recursion to compute these probabilities at time t + 1 from those at time t and the parameters $\{\pi_i, a_{ij}, b_{ik}\}$ of the HMM. Show your work and justify each step.
